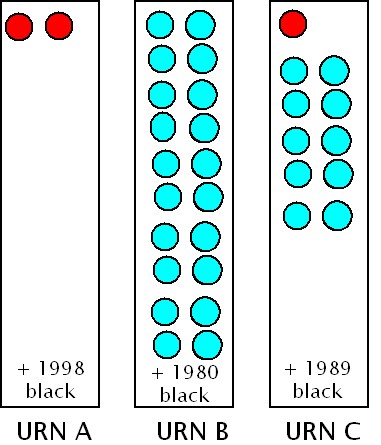

In front of you lie three urns, labeled A, B and C. Each contains 2000 balls. Urn A has 2 reds and the rest black; Urn B has 20 blue and the rest black; Urn C contains 1 red, 10 blue and the rest black. Like so:

You can reach into the urn of your choice and remove a ball. If you draw red, you get $1000; if you draw blue, you get $100; if you draw black, you get nothing. Which urn do you pick and why?

Note that the urns are rigged so that on average (or, in economic jargon, “in expectation”), you’ll win exactly $1 regardless of the urn, but of course it’s perfectly normal to care about things other than what you’ll win in expectation. So it’s perfectly reasonable to prefer one urn to another.
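As a quick check of the "$1 regardless of the urn" claim, here is a minimal Python sketch that computes the expected payout and the spread of each urn from the ball counts and prizes given above. The names `URNS`, `PRIZES`, and `payout_stats` are illustrative and do not come from the post.

```python
from fractions import Fraction
import math

# Ball counts per urn, taken from the post (2000 balls each).
URNS = {
    "A": {"red": 2, "blue": 0, "black": 1998},
    "B": {"red": 0, "blue": 20, "black": 1980},
    "C": {"red": 1, "blue": 10, "black": 1989},
}
# Dollar prize per color, also from the post.
PRIZES = {"red": 1000, "blue": 100, "black": 0}


def payout_stats(counts):
    """Return (mean, variance) of the payout of one draw, as exact fractions."""
    total = sum(counts.values())
    mean = sum(Fraction(n, total) * PRIZES[color] for color, n in counts.items())
    second_moment = sum(
        Fraction(n, total) * PRIZES[color] ** 2 for color, n in counts.items()
    )
    return mean, second_moment - mean ** 2


if __name__ == "__main__":
    for name, counts in URNS.items():
        mean, var = payout_stats(counts)
        print(f"Urn {name}: mean = ${float(mean):.2f}, std dev = ${math.sqrt(var):.2f}")
```

The means all come out to $1, while the variances differ (999 for A, 99 for B, 549 for C), which is the sense in which the urns differ only in risk.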

Post your answers, and in a day or two I’ll post my commentary.

Hat tip to my student Tallis Moore. I won’t tell you now where he got this, to make it marginally harder to Google the standard analysis. Further hat tips forthcoming later in the week.

Note: All men’s groups protesting the insensitivity of a post about “removing balls” will be cheerfully ignored.

Lean towards option B, on the grounds of lower variance. This matches my intuition that the first $100 does (marginally) more for me than the second $100 (since by then I’m marginally richer). This seems standard enough that I’m going to assume Steve has something more interesting in mind.

I wonder if Steve is going to argue that there’s no rational reason to choose C? I wonder also if he’s going to bring up some current event where someone chose something analogous to C?

If money has diminishing marginal utility to me, I should pick B.

It is also possible that $1000 is useful to me to buy some item that I really want and can’t buy with $100, but my pockets are empty and my only chance of buying this item is from the urn prize. If so, then each dollar doesn’t actually have diminishing marginal utility. The dollars have diminishing marginal utility until they get to dollar 999, whereupon the 1000th dollar has a spike; if it’s high enough, I should pick A.

If I also have some money in my pocket, the spike happens at a lower number. If I have some chance of having more money but I don’t know exactly how much (maybe I have to buy lunch but don’t know how much change I’ll have left), the spike smooths out into a bump.

Right, it is irrational to pick C unless you are indifferent between $100 and a 0.1 probability of winning $1000, in which case it doesn’t matter which urn you pick.

I think it’s easy to show that there’s no rational basis for choosing C.

Consider two further “semi-urn” types:

X = 1 red 999 black

Y = 10 blue 990 black

So A can be represented by a pair of Xs, B can be represented by a pair of Ys, and C is one X and one Y.

So choosing a ball from one of the original urns is exactly equivalent to taking the corresponding pair of semi-urns, flipping a coin, and drawing from the left urn if you get heads and from the right urn if you get tails.

Choice C is saying you’d prefer to draw from an X urn if you get heads and a Y urn if you get tails, which makes no rational sense.

I choose C.

Personally, $100 doesn’t mean anything to me. I’m so middle class that my marginal return on $100 is nearly zero. But $1000: I can blow that on some nonsense my wife would normally balk at.

But my wife says $100 is $100 and I shouldn’t be such an idiot. I try to tell her that it’s still a “$1 draw”, but she carefully explains to me that while the marginal utility of $100 is smaller for us than for some households, it isn’t zero. She draws me curves and everything.

In the end, we decide that, for our household, we have disparate preferences, and we choose to reveal them by selecting C.

Multiple rational actors have more rational outcomes than unitary rational actors. They also tend to have more local maxima when goal-seeking via hill climbing, which can make changing a non-optimal decision more expensive than expected.

If we disregard transaction cost for trading, then I think the pick is A. I can sell my pick to a gambler, who would value it at slightly higher than $1.

On first inspection, there are two kinds of ways this could appeal to a person. You might like a small chance (1 in 1000) of winning $1000, where $100 won’t make much difference to your life, but $1000 would. That person is likely to choose A. Or you might like a 1 in 100 chance of getting $100, in which case you’re likely to choose B.

Urn C perhaps won’t appeal to either – because those that most want $1000 have better odds with A, and those who’d prefer a better chance of $100 have a better chance with B.

Each choice is rational depending on what you’re looking for.

Choice A gives the best chance of winning $1000.

Choice B gives the best chance of winning something.

Choice C gives the best chance of winning something while still allowing you the possibility of winning $1000.

@Bennett

“Choice C is saying you’d prefer to draw from an X urn if you get heads and a Y urn if you get tails, which makes no rational sense.”

Not quite. Choice C is saying you would prefer a procedure whereby you toss a coin with the rule that if you get heads you draw from urn X and if you get tails you draw from urn Y. This is not quite the same thing and not obviously irrational (to me at least).

Urn B because I get a significant value from winning, per se, and urn B maximizes the chance of that happening.

If the expected utility for A is larger than B, then the expected utility for A will be larger than C.

If the expected utility for B is larger than A, then the expected utility for B will be larger than C.

Other than all expected utilities being equal, there is no reason to prefer C over both A and B if your objective is to maximize expected utility.

Proof:

(u.pA is the dot product of the 3-element utility vector u with the 3-element probability vector pA for urn A, therefore u.pA is the expected utility for urn A. Same for urns B and C.)

1. pC = (pA + pB)/2

2. u.pA – u.pC = (u.pA – u.pB)/2

3. u.pB – u.pC = (u.pB – u.pA)/2

Preferring C over A and preferring C over B would be a contradiction.

If you prefer C over A, then you must also prefer B over A.

If you prefer C over B, then you must also prefer A over B.
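The identity in step 1 of the proof can be checked numerically. The sketch below uses illustrative names (`P_A`, `expected_utility`, `c_is_midpoint`) and randomly drawn integer utilities, which are an assumption made only for the check; it confirms that urn C's expected utility always sits exactly halfway between A's and B's.

```python
import random
from fractions import Fraction

# Outcome order: (red = $1000, blue = $100, black = $0); probabilities from the post.
P_A = (Fraction(2, 2000), Fraction(0), Fraction(1998, 2000))
P_B = (Fraction(0), Fraction(20, 2000), Fraction(1980, 2000))
P_C = (Fraction(1, 2000), Fraction(10, 2000), Fraction(1989, 2000))


def expected_utility(u, p):
    """Dot product of a utility vector u with a probability vector p."""
    return sum(ui * pi for ui, pi in zip(u, p))


def c_is_midpoint(u):
    """True if EU(C) equals the average of EU(A) and EU(B) for utilities u."""
    eu_a, eu_b, eu_c = (expected_utility(u, p) for p in (P_A, P_B, P_C))
    return eu_c == (eu_a + eu_b) / 2


if __name__ == "__main__":
    rng = random.Random(0)
    # Arbitrary integer utilities for (red, blue, black); any values would do.
    trials = [tuple(rng.randint(-1000, 1000) for _ in range(3)) for _ in range(10_000)]
    print(all(c_is_midpoint(u) for u in trials))
```

Because a midpoint can never be strictly greater than both endpoints, no utility vector makes C strictly better than both A and B under expected utility.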

Let’s say you live in a world with two goods: picking from urn A and picking from urn B. Let’s say you can play the game once a day, at most. Over the long term, I think there’s an implicit indifference curve between these two goods and there’s a “budget” constraint consisting of a fixed number of times you can play during a given time (since you can play at most once a day). Given such a budget constraint and such an indifference curve, over the long run there’s an optimal marginal rate of substitution at which the player likes to play. Picking from urn C seems to be just taking this long-term mixed preference and expressing it as an option in a single game. I pick urn C.

Don’t people tend to like variety more than a lack thereof?

First Steve, that was a good line about removing balls.

Assuming a one-time pick only, personally, I’d probably pick B because it gives me the best odds of winning something (and I paid nothing to play). I can see someone picking A if $1000 makes a difference that $100 wouldn’t. Unlike some above, I could also see picking C as kind of a hybrid: still having some of the thrill of possibly winning $1000 while retaining some of the higher odds of winning, sort of like having a piece of high risk, high reward in your investment portfolio…

I’m sure there’s a deeper answer than that.

Interesting comment from Ken Arromdee.

My choice is Urn B, because it has the fewest number of black balls, so I stand the highest chance of winning *something* regardless of the actual value of the winnings.

Oddly enough, this would seem to suggest that I derive more value from “winning a draw” than I do from the prize itself. Kind of revealing about my personal psychology, isn’t it? :D

@Jonathan Kariv, I agree to the extent that when all three have the same expected return, a risk-averse utility maximizer would choose the one that minimizes the variance. However, I would go one further: the one with the lowest variance in this case also has the least bad conditional Value at Risk (CVaR) at the 99.x% level (i.e., the average return conditional on being below the 99% quantile is 0 for all, but between 99.0% and 99.5% the low-variance urn has a positive return while the others all return zero). Nevertheless, it is possible to have utility on both the downside and upside CVaR. The downside CVaR wouldn’t change much at 99.5% between the different urns, but the upside CVaR is highest for the urn with the two red balls at the 99.5% level. So I can conceive of someone who does not particularly mind the difference in the downside CVaR, but really favors a greater upside CVaR. It really comes down to preferences.

Building on Bennett’s post (5) . . .

Suppose you have a 1/2 probability of entering one of two rooms:

Room 1 contains Urn A

Room 2 contains a choice between Urn A and Urn B

If you prefer Urn A over Urn B, then you will choose from Urn A regardless of the room you enter.

If you prefer Urn B over Urn A, then you will choose from Urn B with probability 1/2 and you will choose from Urn A with probability 1/2. This is the same as choosing from Urn C. Therefore, preferring Urn A over Urn B is the same as preferring Urn A over Urn C.

Likewise, preferring Urn B over Urn A is the same as preferring Urn B over Urn C.

This doesn’t use expected utility, just your preference of Urn A vs Urn B.

(This line of reasoning–if correct–is due to the things I’ve learned by following this informative blog for a few years. If incorrect, it is all on me.)

Can I run it twice?

To me, this looks like our lottery system here in the U.S. The equivalent problem is that someone gives me $1, with the caveat that I have to use the money to buy a lottery ticket. I can buy a Powerball ticket, which has a very low probability of winning a very large payout (Urn A). Or I can buy an Instant Win ticket, which has a higher (sometimes 1 in 10) probability of winning a small payout (Urn B).

The odd thing is that the Powerball has lower payouts too, and what ThomasBayes and Bennett Haselton have shown is that these lower payouts make no sense, as they are the equivalent of Urn C.

Of course, buying lottery tickets is an irrational act to begin with, so we should not expect people buying them to behave rationally.

@John/#16: I admit I needed to look up Conditional value at risk. Yay learning things :-). Point is taken that A vs B depends entirely on your utility function. I suppose I was assuming a smooth utility function with a positive first derivative and a negative second derivative, and yeah, there isn’t actually a reason to assume it’s even continuous.

As everyone else pointed out, yeah, C seems like it’s bang in the middle of A and B, so it should be no one’s first choice, barring a 3-way tie.

“All men’s groups protesting the insensitivity of a post about “removing balls” will be cheerfully ignored.”

Ahhh nuts.

C does not make sense as per post #12.

The A or B choice is up to personal preference. People who buy lottery tickets should prefer A. Proof: they typically accept a negative return in exchange for a chance to win a “huge” amount of money. 1000 is moderately “huge”, in any case more “huge” than 100. People who do not buy lottery tickets and who are rational should find A and B the same. There may be subtleties of course, as in the case of people whom 100 would save from starving to death just as well as 1000 would.

A if you are risk loving.

B if you are risk averse.

A risk neutral person would be indifferent among the urns.

But I’d wager that our good host has something else in mind or he would not have asked the question.

I can think of a scenario where the utility function is a constant, representing the thrill of winning, plus a convex function of the winnings. Don’t have the head (or the paper) for it now, but if the numbers are picked correctly, C can be the best choice.

In my mind I have changed the amounts: firstly, suppose red balls $1 billion and blue balls $100 million. In this scenario I’d definitely pick urn B because I’d want as much chance to win $100 million as possible.

Secondly, suppose red balls are $10 and blue balls $1. In this case I don’t really care about winning $1 but may as well take a punt on winning $10, so I will choose urn A.

In scenario 1 I am risk averse and pick urn B. In scenario two I am risk loving and pick urn A. Therefore I would only pick urn C if I am risk neutral and this would only happen at exactly the right values for the red and blue balls. (In my mind $1000 and $100 seem about right!) However if I am risk neutral I am just as likely to pick urns A and B as urn C.

K, got to a piece of paper, and adding a constant thrill of winning does not help.

Let the utility of the thrill of winning be c. Let the utility of $100 be U(100), and the utility of $1000 be U(1000). For C to be the best choice, we need:

11 * c + U(1000) + 10 * U(100) > 20 * c + 20 * U(100)

and

11 * c + U(1000) + 10 * U(100) > 2 * c + 2 * U(1000)

the first inequality implies:

c < ( U(1000) – 10 * U(100) ) / 9

the second inequality implies:

c 10 * U(100)

This translates into the player being risk-seeking. This is intuitive, as we’ve given a positive value to the thrill of winning.

I think I would walk away. It would be too hard not to get my hopes up and feel worse off when I lost than if I had never drawn at all.

Screw that. Website cut off half my comment.

Is there a general result like this?

—

Suppose p_1, p_2, p_3, . . . p_N are all probability mass functions for some set of K possible outcomes, and suppose that when asked to pick among the N options corresponding to the probability functions, you pick the one corresponding to p_n.

If you are presented with an additional option that has the probability mass function

p = a1*p_1 + a2*p_2 + . . . + aN*p_N,

where all the coefficients (a1, a2, …, aN) are nonnegative and sum to one, then you should still pick the option with the probability mass function p_n. To do otherwise would cause a contradiction with yourself (unless you consider more than one of the original options to be equally good, and p is a linear combination of those options).

—

If so, is it a well-known result with a reference?
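The conjecture above can at least be spot-checked under one extra assumption that the comment itself does not make: that the chooser ranks options by expected utility. The sketch below uses hypothetical helper names (`random_pmf`, `mixture`, `mixture_never_wins`) and random test data; it is a numerical check, not a proof.

```python
import random


def expected_utility(u, p):
    """Dot product of utilities u with probabilities p over the same K outcomes."""
    return sum(ui * pi for ui, pi in zip(u, p))


def random_pmf(rng, k):
    """A random probability mass function on k outcomes."""
    weights = [rng.random() for _ in range(k)]
    total = sum(weights)
    return [w / total for w in weights]


def mixture(coeffs, pmfs):
    """Convex combination a1*p_1 + ... + aN*p_N of the given pmfs."""
    k = len(pmfs[0])
    return [sum(a * p[i] for a, p in zip(coeffs, pmfs)) for i in range(k)]


def mixture_never_wins(rng, n=4, k=5, tol=1e-12):
    """True if the mixture's expected utility does not exceed the best original option."""
    u = [rng.uniform(-10, 10) for _ in range(k)]   # arbitrary utilities (assumption)
    pmfs = [random_pmf(rng, k) for _ in range(n)]
    coeffs = random_pmf(rng, n)                    # nonnegative, sums to one
    best = max(expected_utility(u, p) for p in pmfs)
    return expected_utility(u, mixture(coeffs, pmfs)) <= best + tol


if __name__ == "__main__":
    rng = random.Random(1)
    print(all(mixture_never_wins(rng) for _ in range(5_000)))
```

The reason it holds in this setting is that the mixture's expected utility is a weighted average of the originals' expected utilities, and an average cannot exceed the largest term.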

I think number 12 is right, but only if you assume basically linear utility. If you assume that most of a person’s “big prize utility” comes from the first chance at $1000, and not the second, and you assume that most of the person’s “better chances utility” comes from the first few extra chances, and not the later ones, option C becomes a rational choice.

This discussion is very boring.

High time for Steve to come back and explain to us whatever surprising insight he has into this seemingly mundane problem.

Twofer (29): What do you think about comment (17)? That one doesn’t rely on any utility function, but still demonstrates that a preference of A over B implies a preference of A over C, and, likewise, a preference of B over A implies a preference of B over C.

If you agree with the logic of comment 17, then C can only be preferred if there is no preferred choice between A and B (they are considered equally good).

@Joel #25, simplifying your expressions, I get

U(1000) > 9*c + 10*U(100)

and

9*c + 10*U(100) > U(1000)

combining the two:

9*c + 10*U(100) > 9*c + 10*U(100)

which is impossible. I agree that the thrill of winning doesn’t help.
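The same conclusion can be confirmed by brute force. The sketch below uses the two inequalities from comment #25 (expected utilities multiplied by 2000) and searches an integer grid of values for c, U(100), and U(1000); the grid and the function names are illustrative assumptions.

```python
from itertools import product


def scores(c, u100, u1000):
    """Expected utilities times 2000 for urns A, B, C, with a flat thrill c per win."""
    a = 2 * (c + u1000)
    b = 20 * (c + u100)
    cc = (c + u1000) + 10 * (c + u100)
    return a, b, cc


def c_strictly_best(c, u100, u1000):
    """True if urn C beats both A and B strictly for these utility values."""
    a, b, cc = scores(c, u100, u1000)
    return cc > a and cc > b


if __name__ == "__main__":
    grid = range(0, 51)  # hypothetical range of utility values to try
    hits = [t for t in product(grid, repeat=3) if c_strictly_best(*t)]
    print(len(hits))  # number of grid points where C is strictly best
```

The search finds no such point, because C's score is always the exact average of A's and B's scores.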

If “rational” decision-making does not preclude emotional utility of potential results (and I suppose “utility” is inherently emotional) or of the process itself, then all bets are off, so to speak. The relative proportions of emotional benefit (utility) of playing a given urn and/or hoping for a given potential payout may be entirely subjective/individualized, and the same is true for the emotional utility of winning one given amount vs. another.

As just one example, suppose a fourth urn, D, has one blue ball in it and no other balls, and I’m informed of this fact. And suppose I have to pay $2 to play (Yes, this introduces a sort of transaction cost that perhaps shouldn’t be assumed, but that’s beside the point). Well, I certainly won’t play to choose D. But I might play for one of the others (particularly one time rather than committing myself to play a large number of times) despite the negative expected value in dollar terms, for the fun of the process (i.e., gambling for fun, fully cognizant of negative expected value) and/or because the emotional utility of winning $100 or $1,000 is sufficiently high for me to offset the downside.

I used to think it was irrational (and moronic and pathetic) for anyone to buy lottery tickets. Now I realize that, at least for many, they are getting something worthwhile for their money even (or particularly) when they play on an ongoing basis: maintenance of a dream of being super-rich that they otherwise wouldn’t be able to maintain (or realistically pursue). They get utility from playing and from the hope/dream of winning and the feelings that hope gives them, as opposed to conceding that they absolutely will never be super-rich (ok, there’s never literally zero chance, but playing the lottery may actually provide them many multiples of the chance they’d otherwise have, notwithstanding the extremely low odds of the lottery).

What about men’s groups protesting blue balls? Let’s see you cheerfully ignore THAT.

HEY – Landsburg said NO comment about cutting things off!

I suspect if you are operating a gambling game (slots comes to mind), that option C is the most successful strategy to incentivize play as the players get a chance for 1000X return AND 100X return, even though expected values are the same.

VCs are in the business of picking Option 2 with 25 payoff balls

@nobody.really #34,

I particularly resent the idea that blue balls can be urned. Talk about blaming the victim!

OOPS re: my #33,

I didn’t mean to say Urn D has a blue ball, but rather a new color ball with a payout of $1. And as I noted, one has to pay $2 to play.

If I understand what Steve L. has been teaching us about rationality, then the simple act of playing a lottery says nothing about a person’s rationality. Suppose, for instance, a person is faced with the following two options:

(Option A) Buy a $5 lottery ticket that returns $1 million + $5 with probability 1/10,000,000:

Pr($1M) = 10^{-7}

Pr(-$5) = 1 – 10^{-7}

Pr($0) = 0

(Option B) Don’t buy a lottery ticket:

Pr($1M) = 0

Pr(-$5) = 0

Pr($0) = 1

It could be rational to prefer Option A. HOWEVER, if you prefer Option A to Option B, then you should also prefer Option A to this:

(Option C) Buy a $5 lottery ticket that returns your $5 with probability 1/2 and returns $1M+$5 with probability 1/20,000,000:

Pr($1M) = (1/2)*10^{-7}

Pr(-$5) = (1/2)*(1 – 10^{-7})

Pr($0) = 1/2

That is, faced with these three options, a rational person would either buy no ticket, or buy the ticket in Option A. They should never buy the ticket in Option C.

Is this correct?

@ThomasBayes #39, and yet, if you gave the average lottery ticket buyer the choice of A, B, or C, I suspect many (most?) would choose C. This is of course largely because the average person doesn’t distinguish between odds of 1/10,000,000 and odds of 1/20,000,000. We know it’s rare, and don’t care how rare.

The actual odds posted for winning Powerball are 1/175,223,510. It’s hard to think of an event that could occur more rarely than that – I am more likely to be killed by a shark this summer than to win.

I choose urn A for the reason that it holds the maximum upside potential if I happen to choose a “winning” ball, which is otherwise very unlikely in all cases.

I like to gamble; so I will further estimate how much I would pay to play each urn (one ball selection in each case).

Urn A = $1.15

Urn B = $1.05

Urn C = $1.10

The actual amounts aren’t so significant (I didn’t spend a lot of thought on that), but the order is. I haven’t considered well enough if the ratio among them is significant as well, but I’m sure it should be. In other words given enough thought I might refine my answers; however, what is consistent is that I’m willing to pay more for the bigger upside.

I choose C because I like doing things other people think are irrational.

I can believe there are logical arguments for why C would be inferior for many if not most people, but it also seems almost assured one could come up with a middle-ground utility function for a person who wants a decent chance of winning something while still preserving at least some chance at the big payout too. On #5 (and #17), what of C = 2 urns each with 1/2 X and 1/2 Y mixed together? Seems like you’ve rigged it to set up an irrational choice.

I’ve now convinced myself there must be something that makes this question more interesting than it seems

I scoff at your insistence that I ‘choose’ an urn, instead I will draw a dice and let it decide for me!

The dice chose C

Second thoughts on rational choices…

Rationality is noting that the expected return for each of the three choices is identical. Therefore, it doesn’t matter which urn you choose.

Given that, the urn you choose is a matter of taste. Trying to argue about taste is futile. Attempting to analyze taste and shoehorning it into rational/irrational is beyond futile.

“I like C.”

“If you like to gamble you should like A. If you don’t like to gamble, you should like B. Therefore, you have bad taste.”

“But the expected return is identical. If I chose C because I like its paint job, that’s just as rational as any other choice.”

Ron #45,

As Ken Arromdee notes in #3, one’s utility curve matters. Suppose John Doe has the choice of being given $100 or flipping a coin and either getting $0 or $200. Expected value is $100 either way. But, to take an extreme case for illustration, suppose John needs that $100 to survive: literally, to buy enough food and water, and that $100 is sufficient to survive (and he has no other way of getting food and water or $100 to purchase them). He will probably have a strong (and rational) preference for being given the $100. That first $100 thus has much higher utility to him than the potential second $100. Equal expected value does not mean indifference between two such options (or preference based merely on “a matter of taste”/enjoyment of gambling). Utility curves underlie why some (most) people rationally prefer the less risky option (because of diminishing marginal utility for each incremental unit of dollars or whatever).

Thomas Bayes in #17. You say that your proof of the inferiority of C does not use expected utility. It does, however, use the independence axiom, which is essentially equivalent to using expected utility.

Gordon / Brooks #46,

Yes, under some circumstances, the expected utility will call for choice A or B. It’s my contention that not enough credibility has been given to a flat curve. For instance, Bayes (#12) minimizes the chance that the utility curve will be flat. I think that’s incorrect. If you hand-wave away the curve that represents “I’d sure love to win $1,000, but a free $100 wouldn’t be bad, either.”, then your math leaves out what I feel is a very common case.

Both Powerball and Mega Millions lottery offer a giant but low odds prize and a bunch of smaller ones. That’s urn C, and either an awful lot of people are acting irrationally (always a possibility, of course), or there are an awful lot of people with flat utility curves. Powerball and Mega Millions make a mint by tailoring their lotteries to this effect.

If my curve is flat, my choice is a matter of taste or whim.

I have, in the region of this example, a utility function with a positive first derivative and a negative second derivative.

I choose B.

I also insure my home and car.

Ron #48,

My point was simply that your comment #45 seems to imply that we should presume everyone has a flat utility curve other than, as you put it in #48, “a matter of taste or whim”. I wasn’t saying someone can’t have a flat utility curve over that range, only that we surely can’t presume everyone does.

I think we are all forgetting something. God loves us, so the odds of winning is actually higher than probability would dictate. Based on that, Urn A would be the best choice. However, god doesn’t love us sooo much as to guarantee that we’ll always win $1000, so we should pick C just in case he doesn’t love us. Therefore, final answer, choose C.

I, on the other hand, get an (enjoyable) adrenaline rush whenever I defy the odds and win. The longer the odds, the bigger the rush, but money also has a diminishing marginal utility. The rush from a $1000 win is not ten times as great as that from a $100 win.

Quantifying this, if I have a probability p of obtaining X, and I actually obtain it, my utility is sqrt(X)/sqrt(p). My expected utility is therefore sqrt(X*p).

For A, this means my expected utility is sqrt(1/1000*1000)=1.

For B, it is sqrt(1/100*100), also 1.

For C, however, it is sqrt(1/2000*1000) + sqrt(1/200*100) which is sqrt(2).

Clearly, C is better for me in terms of expected utility.
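The arithmetic in this comment can be reproduced with a few lines of Python. The sketch below applies the commenter's rule, in which each prize X won with probability p contributes p * sqrt(X)/sqrt(p) = sqrt(X * p); the names `rush_score`, `URNS`, and `PRIZES` are illustrative. Note that the value of a prize here depends on its probability, so this is not an ordinary expected-utility calculation, and the midpoint argument from the earlier comments does not constrain it.

```python
import math

TOTAL = 2000                           # balls per urn, from the post
PRIZES = {"red": 1000, "blue": 100}    # dollar prizes, from the post
# Winning balls only; black balls pay nothing and contribute zero.
URNS = {"A": {"red": 2}, "B": {"blue": 20}, "C": {"red": 1, "blue": 10}}


def rush_score(urn):
    """Sum of sqrt(X * p) over the prizes in an urn, following the comment's rule."""
    return sum(math.sqrt(PRIZES[color] * n / TOTAL) for color, n in urn.items())


if __name__ == "__main__":
    for name, urn in URNS.items():
        print(f"Urn {name}: {rush_score(urn):.4f}")
```

Under this rule, splitting the winning balls across two prize levels raises the score, which is why C comes out ahead at sqrt(2).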

@6 +1, @7 +1

Tomorrow, I have to finish a “double-or-nothing” toss with an acquaintance over a $500 debt I owe. I’m not sure yet how valuable your $1000 will be to me.

If I lose the toss tomorrow, I will desperately need to have won $1000 today – it would give me 11000 points worth of utility, but the $100 would give nothing.

If I win tomorrow, I’m indifferent between winning $100 or $1000 today. I’m careful with money (especially after all these reckless gambles), so both are far beyond my expected future lifetime consumption. Either would give me 1000 points worth of utility.

So, if I win tomorrow,

A is worth 0.001 x 1000 = 1

B is worth 0.01 x 1000 = 10

C is worth 0.0055 x 1000 = 5.5

On the other hand, if I lose tomorrow,

A is worth 0.001 x 11000 = 11

B is worth 0.000 x 11000 = 0

C is worth 0.0005 x 11000 = 5.5

Clearly, the minimax solution of choosing Urn C is the most cautious choice, since it guarantees an expected utility of 5.5 with no variance. I’m risk averse (especially after all these reckless gambles), so C is best.

@3: Few of us start from $0. So it’s not clear B is better, even with diminishing utility. My own instinct leans toward A. $100 will make almost no difference to me, but I would feel $1000.

I pick B, because blue is my favorite color. Since “diminishing utility” is irrelevant at the small amounts we are talking about here, and since I refuse to give in to my cognitive biases that severely diminish the psychological effect along the reward slope, I’m going with my favorite color.

Expected Utility says that:

For Urn 1: 1000(2/2000) = $1

For Urn 2: 100(20/2000) = $1

For Urn 3: 1000(1/2000) + 100(10/2000) = $1

So, as Steve says, the expected return is $1. Thus, going by Von Neumann / Morgenstern axioms, a person would be indifferent to all three urns.

So do you like many blue colors, or red colors? Too bad there aren’t any black colors on there – one of my favorite colors – so I would choose urn 1.

Apparently there is some confusion about expected utility. So for those who don’t know, it follows the same rules as mathematical expectation, but with utility values.

Thus it can be expressed as: (Utility of preference A) * (The probability of that preference occurring) + (Utility of preference B) * (The probability of that preference occurring) + … + (Utility of preference X) * (The probability of that preference occurring)

This is not to say that mathematical expectation and expected utility are the same thing: They can lead to different outputs. One uses a Utility function, the other doesn’t.
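To make the distinction concrete, here is a small sketch that ranks the three urns by expected utility under two example utility functions. The starting wealth of $1000 and the particular functions (logarithmic for a risk-averse chooser, quadratic for a risk-seeking one) are assumptions chosen for illustration, not anything stated in the thread.

```python
import math

# (prize in dollars, probability) pairs for each urn, from the post.
LOTTERIES = {
    "A": [(1000, 2 / 2000), (0, 1998 / 2000)],
    "B": [(100, 20 / 2000), (0, 1980 / 2000)],
    "C": [(1000, 1 / 2000), (100, 10 / 2000), (0, 1989 / 2000)],
}

WEALTH = 1000  # hypothetical starting wealth (assumption for illustration)


def expected_utility(lottery, utility):
    """Sum of utility(prize) * probability over the lottery's outcomes."""
    return sum(p * utility(x) for x, p in lottery)


def ranking(utility):
    """Urn names sorted from highest to lowest expected utility."""
    return sorted(
        LOTTERIES,
        key=lambda name: expected_utility(LOTTERIES[name], utility),
        reverse=True,
    )


def concave(x):
    """Diminishing marginal utility of money (risk-averse)."""
    return math.log(WEALTH + x)


def convex(x):
    """Increasing marginal utility of money (risk-seeking)."""
    return (WEALTH + x) ** 2


if __name__ == "__main__":
    print("concave:", ranking(concave))
    print("convex: ", ranking(convex))
```

With these assumptions the concave utility ranks the urns B, C, A and the convex one ranks them A, C, B; in both cases C lands in the middle, even though the expected dollar payout is $1 for every urn.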

I choose urn B. It has the smallest variance so it is most likely to have a positive outcome.

Could we get some actual ECONOMICS into this discussion?

Urn A has 1998 black balls.

Urn B has 1980 black balls.

Urn C has 1989 black balls.

It’s a no-brainer: The economy was doing better in 1998 than in 1980 or 1989, so I prefer Urn A. Comparing the urns based on irrelevancies such as red balls and blue balls would be like comparing cats and dogs.

I am almost indifferent but I have enough money that I slightly prefer A over the others. One hundred bucks won’t make me really happy. If it was more like state lottery numbers and red was $10 million and blue was $1 million I would go for C.

Corrected!:

I am almost indifferent but I have enough money that I slightly prefer A over the others. One hundred bucks won’t make me really happy. If it was more like state lottery numbers and red was $10 million and blue was $1 million I would go for B.

@54

I like!

@54

Actually, that doesn’t help either.

If you lose tomorrow, U(1000) = 11000 and U(100) = 0

If you win tomorrow, U(1000) = U(100) = 1000

If you lose, payoffs are:

A: 2/2000 * 11000 = 11

B: 0

C: 1/2000 * 11000 = 5.5

If you win, payoffs are:

A: 2/2000 * 1000 = 1

B: 20/2000 * 1000 = 10

C: 11/2000 * 1000 = 5.5

Say probability of losing is p, probability of winning is q.

Expected payoffs are:

A: 11p + 1q

B: 0p + 10q

C: 5.5p + 5.5q

We need expected payoff of C to be greater than both A (condition 1) and B (condition 2)

condition 1:

5.5p + 5.5q > 11p + 1q

p < 4.5/5.5 q

condition 2:

5.5p + 5.5q > 0p + 10q

p > 4.5/5.5 q

Condition 1 and condition 2 contradict.

We are only indifferent if:

p = 4.5/5.5 q

and

p + q = 1

implying,

q = 11/20 = 55%

p = 45%

That will be true in general.

Tomorrow, either P or Q can occur with probabilities p and q.

For conciseness, let’s give variable names to the payoffs:

U(1000 | P) = K

U(100 | P) = L

U(1000 | Q) = M

U(100 | Q) = N

Expected payoffs are (for conciseness, I’ve multiplied by 2000):

A: 2pK + 2qM

B: 20pL + 20qN

C: pK + 10pL + qM + 10qN

Condition 1: C > A

pK + 10pL + qM + 10qN > 2pK + 2qM

p > q (M – 10N)/(10L – K)

Condition 2: C > B

pK + 10pL + qM + 10qN > 20pL + 20qN

p < q (M – 10N)/(10L – K)

Condition 1 and 2 contradict.

@54

You’ll have to excuse me, I took probability, but not game theory.

However, with my rudimentary knowledge of game theory (I can draw trees), I understand what you’re saying. This is how I see it:

I choose A, B, or C, and then player 2 chooses P or Q. The tree has six nodes, and suppose the payoffs are as you gave, and the game is a zero-sum game:

A -> P 11/-11

A -> Q 1/-1

B -> P 0/0

B -> Q 10/-10

C -> P 5.5/-5.5

C -> Q 5.5/-5.5

If I choose A, my friend will choose Q, and my payoff will be 1

If I choose B, my friend will choose P, and my payoff will be 0

If I choose C, then in any case, my payoff will be 5.5

My best choice is C.
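The worst-case reasoning in this comment can be written out as a short sketch. The payoff table is copied from the game tree above; the function names and the 50/50 coin used in the comparison are illustrative assumptions (the 50/50 figure reflects treating tomorrow's toss as a fair coin rather than as an opponent's move).

```python
# My payoffs from the game tree above: rows are my urn, columns are the other side's move.
PAYOFFS = {
    "A": {"P": 11, "Q": 1},
    "B": {"P": 0, "Q": 10},
    "C": {"P": 5.5, "Q": 5.5},
}


def maximin(payoffs):
    """Return (choice, guaranteed payoff) that maximizes the worst-case payoff."""
    worst = {row: min(cols.values()) for row, cols in payoffs.items()}
    best = max(worst, key=worst.get)
    return best, worst[best]


def expected_payoff(row, prob_p):
    """Expected payoff of a row if P occurs by chance with probability prob_p."""
    return prob_p * PAYOFFS[row]["P"] + (1 - prob_p) * PAYOFFS[row]["Q"]


if __name__ == "__main__":
    print("maximin choice:", maximin(PAYOFFS))
    for row in PAYOFFS:
        print(row, "expected payoff at a fair coin:", expected_payoff(row, 0.5))
```

Against an adversary who picks P or Q after seeing the urn, C guarantees 5.5 among the three pure choices. If P and Q are instead decided by a fair coin, the expected payoffs are 6 for A, 5 for B, and 5.5 for C, so C is again in the middle, in line with the earlier expected-payoff comment.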

I read somewhere (some behavioral economist, I think) that minimizing disappointment is better than maximizing outcome.

So, I’m for B.

@Joel #68 (in ref to #54), yes, I’m not trying to maximise my expected outcome tomorrow by choosing C, I’m trying to mitigate my worst case scenario.

There are several points of view here, I would definitely choose C as the probability of getting a prize is 0.55% with the possibility of the outcome being a $1000 prize (0.05%). Now in business everything you are not winning can be considered a loss, hence you are trading a 1% possibility of getting $100 (twice the chance of getting a prize from C) for a possible bigger “revenue”. Selecting A is absolutely ruled out since the chance of getting it is 0.10% which is way too low. Rationally thinking, since it doesn’t state this chance has cost anything to me, winning nothing doesn’t imply a problem, playing for something good or something better is the most logical solution.

i like to think of it like this. odds are pretty darn good im walking away from this game a loser. if i go a> i have a 1/1000 chance of a win. b> 1/100 and c>1/180. im going to maximize my chance of _any_ good outcome and go with b.