How rational are you? I once posted a test, based on ideas of the economist Maurice Allais, that most people seem to fail. Today’s test, based on ideas of the economist Dick Zeckhauser and the philosopher Richard Jeffrey, is one that almost everyone fails.

Suppose you’ve somehow found yourself in a game of Russian Roulette. Russian roulette is not, perhaps, the most rational of games to be playing in the first place, so let’s suppose you’ve been forced to play.

Question 1: At the moment, there are two bullets in the six-shooter pointed at your head. How much would you pay to remove both bullets and play with an empty chamber?

Question 2: At the moment, there are four bullets in the six-shooter. How much would you pay to remove one of them and play with a half-full chamber?

In case it’s hard for you to come up with specific numbers, let’s ask a simpler question:

The Big Question: Which would you pay more for — the right to remove two bullets out of two, or the right to remove one bullet out of four?

The question is to be answered on the assumption that you have no heirs you care about, so money has no value to you after you’re dead.

Almost everybody says they’d pay more in the first case than the second. Arguably, that means that in this scenario almost nobody is rational — because a rational person would give the same answer to both questions.

The reason for that is not immediately obvious. To understand it, you’ve got to think about four questions:

Question A: You’re playing with a six-shooter that contains two bullets. How much would you pay to remove them both? (This is the same as Question 1.)

Question B: You’re playing with a three-shooter that contains one bullet. How much would you pay to remove that bullet?

Question C: There’s a 50% chance you’ll be summarily executed and a 50% chance you’ll be forced to play Russian roulette with a three-shooter containing one bullet. How much would you pay to remove that bullet?

Question D: You’re playing with a six-shooter that contains four bullets. How much would you pay to remove one of them? (This is the same as Question 2.)

Now here comes the argument:

- In Questions A and B you are facing a 1/3 chance of death, and in each case you are offered the opportunity to escape that chance of death completely. Therefore they’re really the same question and they should have the same answer.

- In Question C, half the time you’re dead anyway. The other half the time you’re right back in Question B. So surely questions C and B should have the same answer.

- In Question D, there are three bullets that aren’t for sale. 50% of the time, one of those bullets will come up and you’re dead. The other 50% of the time, you’re playing Russian roulette with the three remaining chambers, one of which contains a bullet. Therefore Question D is exactly like Question C, and these questions should have the same answer.

Okay, then. If Questions A and B should have the same answer, and Questions B and C should have the same answer, and Questions C and D should have the same answer — then surely Questions A and D should have the same answer! But these, of course, are exactly the two questions we started with.
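The chain of equalities can be checked with exact arithmetic. This is a minimal sketch. It assumes, in line with the no-heirs stipulation, that death is worth 0 to you and that being alive with wealth w is worth u(w) for some increasing function u. The most you would pay, X, then satisfies P(survive before) · u(w) = P(survive after) · u(w - X), so the only thing that matters is the ratio P(survive before) / P(survive after). The helper names are ours, for illustration only.

```python
from fractions import Fraction

def survive(chambers, bullets):
    """Chance of surviving one pull after a fair spin."""
    return Fraction(chambers - bullets, chambers)

def indifference_ratio(p_before, p_after):
    """u(w - X) / u(w) at the highest price X you would accept.

    Assumes death is worth 0 and money is worthless once you're dead.
    """
    return p_before / p_after

questions = {
    "A": (survive(6, 2), survive(6, 0)),    # remove 2 of 2 in a six-shooter
    "B": (survive(3, 1), survive(3, 0)),    # remove 1 of 1 in a three-shooter
    "C": (Fraction(1, 2) * survive(3, 1),   # 50% executed, otherwise B
          Fraction(1, 2) * survive(3, 0)),
    "D": (survive(6, 4), survive(6, 3)),    # remove 1 of 4 in a six-shooter
}

ratios = {name: indifference_ratio(*ps) for name, ps in questions.items()}
for name, r in ratios.items():
    print(name, r)
```

Every question gives the same ratio, 2/3, so whatever u is, the maximum price X comes out the same in all four. With the purely illustrative choice u(w) = √w, for example, (w - X)/w = 4/9 and X = 5w/9 in each case.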

So. Did you pass the test? I for one did not. That leaves me (and also you, if you failed along with me) two options. Either we can maintain that there’s some flaw in the above argument — some way in which it fails to capture the “right” meaning of rationality — or we can conclude that we don’t always make good decisions, and that meditating on our failures can help us make better decisions in the future (including in situations more likely to arise than forced Russian Roulette). I am mostly in the latter camp. How about you?

If I were *forced* to play Russian Roulette I’d try to play it as a game of skill rather than a game of chance. So if there are two bullets, I’d put them next to each other in hopes that the weight makes it far more likely the spin stops with those two on the bottom (if it fires from the top). Or if it’s a gun that shoots from the bottom, I’d do the spin with the gun upside-down. But allowing for the fact that real-world weighted barrels produce a non-random spin totally messes up the odds computations…

http://www.listafterlist.com/tabid/57/listid/9552/Everything+Else/How+to+Win+at+Russian+Roulette.aspx

One important consideration is ignored in your line of argument: if there is a 50% chance of dying immediately, the money you have is worth much less to you, because there is a 50% chance you won’t have a chance to spend it. So the fourth bullet should be about twice as expensive compared to the first two (or rather you should be prepared to pay twice as much).

So by extension of this argument you should also pay the same amount to remove one bullet out of any gun with n slots and m bullets for any n and m.

In other words the marginal bullet has a fixed price equal to your total wealth. Weird.

Would another way of looking at this be to consider the payoff structure? If you consider life to have an infinite-value payoff and death to have a negative infinite-value payoff, you would pay any amount in either scenario. This is what occurred to me when I read your post. I guess you could adjust for that kind of consideration by saying it’s Russian roulette, but only aimed at your little toe.

Don’t you always spend 100% of your wealth once there is no bequest motive? So given the one choice for B you always pay the same as in A.

How would the answer change if you knew you would get the chance to, say, pay to remove a bullet (in either or both cases) after pulling the trigger once?

I know this kinda ruins the cool instrument that is this problem but…

I really enjoyed these questions, even though I “failed” the test. Furthermore, I think I follow the argument that you’ve presented for why a rational agent would be willing to pay equal amounts in the two cases.

But I don’t yet see the flaw in my argument for paying twice as much in the first scenario. Here’s the argument:

(1) Suppose you value your own life at V.

(2) The difference, in utility, between the two outcomes is equal to V.

(3) In Question 1, there is a 1/3 chance that you’ll die if you pay nothing, and a 0 chance you’ll die if you pay $.

(4) In Q1, paying $ reduces your chance of dying by 1/3.

(5) In Q1, not paying means you face a lottery with an expected value of V*2/3. Paying $ means you face a lottery with an expected value of V minus the payment $, that is, V-$.

(6) In Q1, your alternatives are: V*2/3 if you don’t pay, and V-$ if you pay $.

(7) In Q1, V-$ is the better option if and only if $ is less than V*1/3.

(8) Therefore, a rational agent in your position would be willing to pay up to V/3 in Question 1.

(9) Now for Q2, the treatment of which ought to be symmetrical to that of Q1. In Q2, there is a 4/6=2/3 chance that you’ll die if you do nothing, and a 1/2 chance you’ll die if you pay up.

(10) In Q2, paying up reduces the chance you’ll die by 1/6.

(11) In Q2, paying nothing means you face a lottery with an expected value of V/3. Paying $ means that you face a lottery with an expected value of V/2-$.

(12) In Q2, your alternatives are: V/3 if you don’t pay, and V/2-$ if you pay $.

(13) In Q2, V/2-$ is the better alternative if and only if $ is less than V/6.

(14) Therefore, a rational agent in Q2 would be willing to pay up to V/6.

(15) Conclusion: A rational agent would be willing to pay more in Q1 than in Q2.

Nick, one word: inheritance. It is my understanding that very few people spend their entire wealth when they find out, say, that they have terminal cancer — the tiny marginal benefit of going to America for treatment or whatever doesn’t justify the big marginal cost you’d impose on the ones you love.

I passed the test.

Why do most people fail the test? A probable source is the money illusion. In question 2, under both options there is a high probability you’ll be dead anyway, so the money/utility ratio is very different than in question 1. Most people fail to adjust for that.

It would be very interesting to find out how this works in the real world. We could encounter similar dilemmas in the context of terminal diseases and costly high-risk therapies among people with no bequest motive.

I think I object to the question B = question C step on the grounds that improving my odds in a game I am certain to play is more valuable than improving my odds in a game I have a 50% chance of playing.

Also I’m pretty sure (haven’t actually gone through the details) that you can use the logic that gets you to agree question B and question C have the same answer to “prove” that removing 2 bullets from a 6 shooter with 4 loaded is the same as removing 1 bullet from a 6 shooter with 4 loaded. If I bought that conclusion I’d have to concede that removing 1 bullet from a 6 shooter with 3 loaded was valueless.

Even if you don’t have any heirs you care about, it would take a coldly rational person indeed to not care about how much money ends up in the hands of the people who forced you to play Russian Roulette.

Well, being a poor student, my answer was every dollar I have for both; I don’t have that much, though. I am not sure that is a rational answer, but it is what I would do. I don’t think I would have access to debt markets during this roulette game; maybe my captors have a Visa machine, however. I would be willing to pay that amount to decrease my chance of death to 1/2 from 2/3 and certainly to 0 from 1/3. Hopefully I have a long life ahead of me, so it would be worth it.

But I could see people paying more for the first based on some nonlinear or variance based preference function.

U(i)= i+(var(i))^2

Where “i” is the probability of death, var(i) = i(1-i) is the variance of the death indicator, and we minimize the function.

U(4/6)= .71605

U(3/6)= .56250

U(2/6)= .38272

U(0) = 0

U(4/6)-U(3/6)= .15355

U(2/6)-U(0)= .38272

So our agent gets about two and a half times as much utility (.38272/.15355 ≈ 2.49) from moving to a zero probability of death as from moving to a one-half probability. I am treating these differences as the relative prices people should pay in my competitive Russian Roulette market.

I know you will attack this function; my only point is that the assumption that we should value our own lives in some linear function is just as weak. There is no physical law out there that states we should do that.

I have likely made some mistakes in the math, or in calling this a “market”; forgive me, I flew in on a red-eye.
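Peter’s figures can be reproduced with a few lines of Python. The sketch reads var(i) as i(1-i), the variance of the 0/1 death indicator, since that reading matches every number quoted; the function name `disutility` is ours.

```python
def disutility(i):
    """Peter's proposed function i + var(i)**2, to be minimized.

    i is the probability of death; var(i) = i * (1 - i) is the variance
    of the 0/1 death indicator (our reading of "var").
    """
    var = i * (1 - i)
    return i + var ** 2

for p in (4/6, 3/6, 2/6, 0):
    print(f"U({p:.4f}) = {disutility(p):.5f}")

gain_q2 = disutility(4/6) - disutility(3/6)   # remove 1 of 4 bullets
gain_q1 = disutility(2/6) - disutility(0)     # remove 2 of 2 bullets
print(f"{gain_q2:.5f} {gain_q1:.5f} ratio {gain_q1 / gain_q2:.2f}")
```

The last line shows the two reductions compare at roughly 2.5 to 1 under this function (exactly 496/199), not 2 to 1.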

I take back part of my argument, not sure what part. If it was linear you would have to pay more for more bullets to be removed, or something like that.

I went for the “wrong” answer too.

There has been some argument that the death part of the loss distorts the problem too much. What happens if we lower the stakes a bit? Instead of pointing at your head, you point the revolver at your foot. If you pose the question this way, what answer would you get? I think most people’s gut reaction would be the same as in the Russian roulette version.

How about putting it as a “willingness to accept”? Does it change the way the problem is perceived?

I could be persuaded to take on the possibility of shooting myself in the foot for a large amount of money, say (for argument) $1 million for a gun with 2 bullets in it. How much less would I accept to remove both bullets? Well, obviously not all of it, as I would then be back in a position where I would not take the bet on in the first place, but it is presumably a shade under $1M. Now, how much would someone have to pay me to point a gun with 4 bullets in it at my foot? I say $2M, if I was prepared to take $1M for 2 bullets. How much to take one bullet away? Just about $0.5M, since the “price” is $2M for 4 bullets. So for Q1, I would accept about $1M less to remove 2 bullets. In Q2 I would accept about $0.5M less to remove one of 4 bullets. Has my reasoning led me astray?

Let’s lower the stakes some more. How about pointing it at a $100 bill (which you get to keep if it is not shot)? Well, how much would I pay to remove the 2 bullets in Q1? With 2 bullets, the $100 bill is worth an expected $66 (a 2/3 chance of getting it). With no bullets it is worth $100. So I will pay $33 to remove the 2 bullets.

With Q2 (4 bullets) the expectation from the $100 is $33 (2/3 chance of shooting it). With 3 bullets it is worth $50. So I will pay about 50-33 = $17 to remove one bullet from 4. Why is this not the same? Can someone explain?
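Harold’s arithmetic for the $100-bill version can be checked exactly. A minimal sketch, assuming risk neutrality over $100 and that the price comes out of money you keep whether or not the bill is shot; in that case you simply compare the bill’s expected values. The names `BILL` and `expected_bill` are ours.

```python
from fractions import Fraction

BILL = 100  # dollars; you keep the bill only if it isn't shot

def expected_bill(chambers, bullets):
    """Expected value of the bill when the gun is aimed at it."""
    return BILL * Fraction(chambers - bullets, chambers)

pay_q1 = expected_bill(6, 0) - expected_bill(6, 2)   # remove 2 of 2
pay_q2 = expected_bill(6, 3) - expected_bill(6, 4)   # remove 1 of 4
print(pay_q1, float(pay_q1))
print(pay_q2, float(pay_q2))
```

The exact answers are $100/3 (about $33.33) and $50/3 (about $16.67), so in this version Q1 really is worth twice Q2. Unlike the roulette version, the money you pay still matters to you in the outcomes where the bill is destroyed.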

In both cases, the probability of surviving is multiplied by 1.5 (from 2/3 to 1, or from 1/3 to 1/2), so, given that money has utility only if one is alive, the same amount should be offered.

Still, it’s not intuitive that one should bid the same to pass from 33% to 0% probability of dying, as to pass from 98% to 97%.

@Harold : the key is that if you are dead, money has no value to you. That’s where the analogy between Russian Roulette and your ‘games’ breaks down.

How about shooting yourself in the foot? Would you pay more to take out 2 bullets from 2, or one bullet from 4? I would definitely still have said 2 bullets from 2. How about you?

To add to the last post I made (the details I didn’t give the first time around). Sorry for the double post.

Question E: There’s a 50% chance you play with 3 holes, one of them loaded, and a 50% chance you’re let free. How much will you pay to remove the bullet? If I buy the Question B = Question C logic, then this should be the same as them.

Question F: How much would I pay to remove 1 bullet from a gun with 6 slots, 1 of which is full? Clearly the same as Question E.

So therefore I should pay the same to remove 2 of 2 bullets from a gun with 6 holes as 1 of 1 bullet from a gun with 6 holes.

But then what would I pay to remove 1 of 2 bullets from a gun with 6 holes? Apparently nothing as I’d still need to pay whatever we answered to question F (and A,B,C,D and E) to get back down to an empty gun.

I suppose one could argue that I’d be willing to pay anything to remove a single bullet from any gun so we’re dealing with infinities (be in infinite debt for life). But practically this is only the amount of value we’ll generate in the rest of our life (finite) so I’m not sure I buy that either.

@Harold: My 2 cents: our utility function is presumably close to linear (locally) for $100; for larger stakes I think it isn’t.

A dual problem: suppose that in the Russian Roulette scenario, the player is offered free life insurance, to be paid in case of death, in exchange for adding bullets to the revolver.

Question 1: How much life insurance would you accept to increase from 1 to 2 bullets in a 6-shot revolver?

Question 2: How much life insurance would you accept to increase from 1 to 2 bullets in a 100-shot revolver?

By the reasoning of the original problem, these 2 questions should have the same answer.

Do you play this game once or many times? If once, then I can see the answer being the same. If many times, then I see the greater value of the empty chamber.

Of course, the right answer is to pay to put more bullets in the gun, then shoot the people making you play.

Phil: Nothing weird about that. My initial answer to the two questions was “well, as little as they’d let me, but up to my total wealth if necessary”.

At the risk of sounding foolish…

I’m not convinced that paying more in Question 1 is irrational. In Question 2, by removing one bullet you lessen your chance of being shot by roughly 17%, and in Question 1 you reduce it by roughly 33%.

The margin is important.

Jonathan Kariv: Your Question E is not equivalent to the others, because in cases where you are let free, you realize a cost. The key to the original problem is that you should not care about scenarios where you would die anyway; this does not extend to imply that you would not care about scenarios where you are set free.

In general, the option to remove k1 bullets out of m1 in an n1-shooter is equivalent to the option to remove k2 bullets out of m2 in an n2-shooter if k1/(n1-m1+k1) = k2/(n2-m2+k2). Each side gives the chance of death you are avoiding, after eliminating the scenarios where you’d “die anyway.”
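Jonathan’s condition can be checked against the survival ratio, under the post’s assumption that money is worthless once you are dead. Removing k of m bullets from an n-shooter changes your survival chance from (n-m)/n to (n-m+k)/n, and the ratio of the two is exactly 1 - k/(n-m+k). A minimal sketch (function names are ours):

```python
from fractions import Fraction

def avoided_death_chance(n, m, k):
    """Chance of death avoided by removing k of m bullets from an n-shooter,
    given that none of the m - k bullets you can't buy comes up."""
    return Fraction(k, n - m + k)

def survival_ratio(n, m, k):
    """P(survive before) / P(survive after removing k bullets)."""
    return Fraction(n - m, n) / Fraction(n - m + k, n)

cases = [(6, 2, 2), (6, 4, 1), (3, 1, 1), (100, 98, 1)]
for n, m, k in cases:
    a = avoided_death_chance(n, m, k)
    assert survival_ratio(n, m, k) == 1 - a   # the two views agree
    print(f"remove {k} of {m} in a {n}-shooter: avoided {a}")
```

All four cases, including removing one bullet from a 100-shooter holding 98, avoid a 1/3 conditional chance of death, so by this condition they should all command the same price.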

I instinctively made the calculation the same way Michael did. Is there a reason that is wrong?

In Question 1: you’re paying to reduce your chance of death by 33%

In Question 2: you’re paying to reduce your chance of death by (4/6)-(3/6) = 17%

Therefore, question 1 would have to be worth more to me. Is there something wrong with that reasoning that I’m missing?

What if you’re playing both games at the same time with, say, two cats? Which decision leads to fewer cat deaths?

I think, as stated, this problem illustrates the principle of sunk costs. From a statistical point of view, B and C are different, but from a decision-making point of view, they’re the same.

I’m curious how utility of money after survival impacts this.

Jonathan Kariv: Jonathan Campbell has it exactly right, but let me reiterate:

The argument for the equivalence of B and C is that C looks exactly like B in every instance where your decision actually matters.

But in your Question E, there is a possibility you’ll be let free, in which case your decision *does* matter, because you prefer to be let free as a rich man than let free as a poor man. That’s exactly where the argument breaks down.

Peter Mansbridge: But I did not *assume* linear utility; I (essentially) proved it, from the equivalence of the four questions I listed.

So when you write:

the assumption that we should value our own lives in some linear function is just as weak. There is no physical law out there that states we should do that.

this quite misses the point. No such assumption was made. This was a conclusion, not an assumption, and to reject the conclusion, you must reject one of the assumptions that got us there.

Keith: There are several errors in your reasoning; but the most important comes in Parts 11 and 12, where (given your framework) you should have written (V-$)/2, not V/2-$.

The more fundamental error is that instead of writing V-$, you should have written W, where W is the value of being alive but $ poorer; there’s no reason this needs to be the same as V-$. But that’s not the error that led you to the wrong conclusion. It’s the confusion between (V-$)/2 and V/2 – $ that led you astray.
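Steve’s correction can be checked with a short sketch. It keeps Keith’s simplified framework, in which being alive and $ poorer is worth V-$ and death is worth 0. It sets aside Steve’s more fundamental point about W, and it fixes only the Q2 step so that paying is worth (V-$)/2. V is normalised to 1, and the function name is ours.

```python
from fractions import Fraction

def max_price(p_survive_before, p_survive_after, V=Fraction(1)):
    """Largest $ with p_after * (V - $) >= p_before * V.

    Uses (V - $) times the survival chance, as in Steve's correction,
    rather than V times the survival chance minus $.
    """
    return V * (1 - p_survive_before / p_survive_after)

q1 = max_price(Fraction(4, 6), Fraction(6, 6))   # remove 2 of 2 bullets
q2 = max_price(Fraction(2, 6), Fraction(3, 6))   # remove 1 of 4 bullets
print(q1, q2)
```

Both thresholds come out to V/3, so the corrected version of Keith’s argument reaches the same conclusion as the post.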

Mark: What you’re missing is that in Question 1, you’re paying with money that you’re sure to miss, whereas in Question 2, you’re paying with money you might never miss anyway.

I think I agree with Jonathan Kariv and I also think you may be computing different chances than I am. Let me focus first on the question of whether B and C are equivalent as I think that’s part of the core question of “rational” decision-making.

I agree that half the time, questions B and C are the same. The issue, though, is “what are my chances of surviving the scenario as posed and how much am I improving my chances of survival?” Or put another way, what’s the overall value of the removal to me?

In scenario B I’m improving my chances of survival by 33%, to 100%. But in C I only get that improvement half the time, so I’m improving my chances of survival by 50% or 16.5% (I could argue either way). If I’m only getting half as much benefit from the scenario as a whole by removing that bullet, shouldn’t its value be half as much?

Alan Wexelblat:

If I’m only getting half as much benefit from the scenario as a whole by removing that bullet, shouldn’t its value be half as much?

The value of what you’re getting is reduced by half, but so is the value of the money you’re paying with, because there’s a 50% chance you’ll get hit by one of the three not-for-sale bullets, in which case you won’t be needing that money anyway.

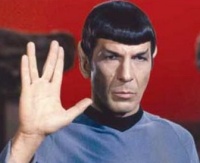

Great, and I was feeling so good about my Vulcan-esque lottery habits after passing the first rationality test.

Anyways, I remember a few commenters on the last post saying something along the lines of being ‘forced to gamble’ somehow changes your preferences. While that argument is rather weak, if I were in this position, the guarantee of not dying would definitely have an extra psychological bonus. I think if we reword the question so we have our piggy bank hanging over a cliff and we have to shoot at it, we would get more rational results.

Steve: This seems to me to be just another version of Allais’ question, or at least a question that relies on the same principle:

When deciding between choices A and B, it should not matter whether there is some probability that your choice will be rendered irrelevant and instead event C will be forced on you.

In this case, choice A is taking a 1/3 chance of being shot and killed by a gun, and choice B is paying a sum of money to have 0 chance of being shot. Event C is being shot.

Your whole argument depends on a hidden assumption that you will not care about what happens to your money if you are dead. There are lots of rational people who do write last wills, and who do care.

When you say people are not rational, it appears that you just mean that they do not necessarily make the same hidden assumptions that you think that they ought to be making. You have not really shown that anyone is irrational.

Jonathan Campbell:

When deciding between choices A and B, it should not matter whether there is some probability that your choice will be rendered irrelevant and instead event C will be forced on you.

Yes, this is exactly the principle that underlies both this and the Allais question.

In both cases, one is (implicitly) starting from the von Neumann/Morgenstern axioms for rational decisionmaking. The only one of those axioms that’s at all controversial is the one you cited; therefore that’s the one that’s going to underlie any paradox at all along these lines.

Steve: Thanks for the quick and concise reply. I’m having a hard time grasping how the expected value of the money applies. I thought expected value applied to situations in which I was computing the amount of goods/services I could purchase with a fixed dollar amount. In this scenario (C) there’s a 50% chance I will be able to purchase 0, regardless of how much money I have or have not paid. What am I missing?

Alan Wexelblat:

Try thinking of it this way:

A) How much would you pay for a Rolls-Royce?

B) I might kill you this morning, or I might not. If I don’t (and life returns to normal), how much would you pay for a Rolls-Royce?

The argument is that these two questions should have the same answer. Once I’ve decided not to kill you, the two questions look the same.

Now:

A) How much would you pay to avoid a 1/3 chance of death?

B) I might kill you with one of the three not-for-sale bullets, or I might not. If I don’t, how much would you pay to avoid a 1/3 chance of death?

These questions should also have the same answer, for the same reason as in the Rolls-Royce case.

Now when I ask you what you’re willing to pay to remove 1 bullet out of 4, I’m essentially asking Question B. I might kill you with one of the three not-for-sale bullets. Your answer is going to matter only if I don’t do that. But then you’re right back in the buying-your-way-out-of-a-1/3-chance-of-death case.

Steve: I think I understand the logic, but I’m struggling to accept it.

Here are some more questions:

1. You start with 6 bullets in the gun. Would you pay the same amount to remove 1 of them as you would to remove all of them?

2. Would you pay more to a) remove 3 bullets from a gun that has 3 to start; or b) remove 2 bullets from a gun that has 5 to start?

I think I know the answers to these, but I’m struggling to accept them. Your thoughts?

(These questions should be interesting unless I’ve screwed up my interpretation of the lesson, and/or I’ve flubbed the subsequent math.)

Thanks for another enlightening puzzle.
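These two questions can be run through a survival-ratio sketch. This is our framing, not the post’s: assuming death is worth 0 and money is worthless once you are dead, the most you would pay, X, satisfies u(w - X)/u(w) = P(survive before)/P(survive after) for an increasing utility u, so a smaller ratio means a higher price. The function name is ours.

```python
from fractions import Fraction

def survival_ratio(n, m, k):
    """P(survive with m bullets) / P(survive with m - k bullets), n-shooter.

    A smaller ratio means more of your remaining chance of life is at stake,
    so (with money worthless after death) you should pay more.
    """
    return Fraction(n - m, n) / Fraction(n - m + k, n)

# 1. Six bullets: remove 1 of them vs. remove all 6.
print(survival_ratio(6, 6, 1), survival_ratio(6, 6, 6))
# 2. a) remove 3 of 3; b) remove 2 of 5.
print(survival_ratio(6, 3, 3), survival_ratio(6, 5, 2))
```

In question 1 both ratios are 0: without paying you die for certain, so the sketch says to pay up to everything you own in both versions. In question 2, option b’s ratio (1/3) is below option a’s (1/2), so b is worth more.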

So this only works if the stakes are death? For shooting in the foot or $100 you are correct to pay more for Q1?

The key step is C, which says: There is a 50% chance of being executed, and a 50% chance of playing Russian roulette with 1 bullet in a 3-shooter. The key is that you should pay the same for this bullet as for the 2 bullets in a 6-shooter, because if the 50% execution comes up, you don’t care anymore. Is this right?

If you were playing for shooting in the foot or $100 then you are right to pay more for Q1. Is this right?

Is this just a complicated way of saying that your life is worth more than you are able to pay for it?

OK, thanks, I think I’m getting the gist of the logic problem, combining your response with Jonathan Campbell’s comment. I might likewise add that there’s a .001% chance that an unstoppable bolt of lightning will kill me on the way to play the game and _that_ doesn’t affect my choices either. I now grok how your original problems B and C are equivalent, though I still don’t get how expected value of money plays into it. But I expect you have many other comments to answer, so feel free not to get diverted by this.

I don’t think there is a flaw in the argument, but the way execution is treated means an agent should pay the same amount no matter the reduction. If an agent plays the game with a uniform distribution instead of a partially loaded gun, an analogous argument would show the agent pays the same amount for a 100% reduction in the chance of death as an ε% reduction. Consider:

Question A: A number is drawn uniformly from (0,1). If the number is below one, you are shot. How much would you pay to change the threshold to zero?

Question B: A number is drawn uniformly from (1-ε,1). If the number is below one, you are shot. How much would you pay to change the threshold to 1-ε?

Question C: There’s a 1-ε chance you’ll be summarily executed and an ε chance you’ll be shot if a number drawn from (0,1) is below one. How much would you pay to change the threshold to zero?

Question D: A number is drawn uniformly from (0,1). If the number is below one, you are shot. How much would you pay to change the threshold to 1-ε?

If we assume continuity as ε goes to zero, the agent is indifferent between living and dying, which seems like something a rational agent has to accept.

The former camp.

All deliberate human action is rational. See Mises, _Human Action_, Chapter 1.

The expected value model does not capture how humans choose in the face of risk and/or uncertainty, neither in a descriptive nor prescriptive fashion.

I think Stefano raises an interesting point. By this logic, you should pay the same amount to remove 1 bullet from a 100-shooter containing 98 bullets. 97% of the time you’re dead anyway. If not, you raise your chance of survival from 67% to 100%, same as the original scenario.

The problem appears to be one of absolute vs. relative risk.

oops, missing word in the last clause:

“which *hardly* seems like something a rational agent has to accept.”

I got the wrong answer, but I changed my mind once the right answer was explained.

So I don’t think I was irrational. Just wrong.

@Steve

A) How much would you pay to avoid a 1/3 chance of death?

B) I might kill you with one of the three not-for-sale bullets, or I might not. If I don’t, how much would you pay to avoid a 1/3 chance of death?

These questions should also have the same answer, for the same reason as in the Rolls-Royce case.

I agree that these answers are the same, but these aren’t the same questions one and two are asking in the OP.

Question 1 asks: How much would you pay to avoid a 1/3 chance of death?

Question 2 asks: How much would you pay to reduce a 2/3 chance of death to a 1/2 chance of death?

While I understand your reasoning in the quote above, and in Questions A – D in the OP, I’m still not sure why I should expect a rational person to arrive at the same conclusion for Questions 1 and 2.

@Roger Schlafly:

“Your whole argument depends on a hidden assumption that you will not care about what happens to your money if you are dead. There are lots of rational people who do write last wills, and who do care.”

Yeah, that assumption is hidden right in the middle of the original post:

“The question is to be answered on the assumption that you have no heirs you care about, so money has no value to you after you’re dead.”

Why would you write something in such a hostile tone without even reading Steve’s post properly first?

OK, so to simplify, the scenarios are:

1) 3 chambers 1 bullet, remove one

2) N chambers N-2 bullets, remove one

Following your B=C argument, let me repartition 1) as 1a)

1a) either (1/3 dice roll) don’t play at all and you’re alive anyway, or (2/3) you’re playing Russian roulette with 2 chambers 1 bullet. Remove one?

By similar reasoning (just ignoring the ‘alive anyway’ case) this should be as valuable as just

1b) 2 chambers 1 bullet, remove one

which (by the above post’s logic) is as valuable as

2b) N chambers N-2 bullets, remove two

So equating 2) and 2b), if you have N chambers and N-2 bullets, you should pay as much to remove one as you would to remove two. Hmm.

And I believe similar equivalences would get you to the result that in an (N,M) game you should pay the same to remove K (>0) regardless of N,M,K. That can’t really be right. So where have I, or you, gone wrong?

The only place I can see is in the ignoring of special cases (you ignore the ‘dead anyway’ case, I’ve ignored an ‘alive anyway’ case). You are probably going to say your ignore is valid and mine isn’t. I think they are probably both invalid. Am I wrong?

Thanks,

There are two types of scenarios. In one set (questions 1, A, and B) you can pay to be free, while in the other set (2, C, D) you can only pay to lessen your chance of death.

By putting ourselves in the overstretched hypothetical situation, we try to build out how this could work in the real world. For the first set, it easily boils down to a “Your money or (possibly) your life” decision, which is both possible and straightforward, though not usually involving Russian Roulette.

I think many of us balk at the second set because we don’t trust the actors involved. Will I really have a better chance of living if I give them some of my money? Will they come back tomorrow with the same deal? Will I ever truly be safe? Now we move into “Don’t negotiate with terrorists” or resign ourselves to paying protection money. We may have to make these types of decisions too, but rarely are the percentages or the solutions clear-cut in real life.

I don’t think these two sets of scenarios are equivalent.

John Faben:

Thanks for pointing that out. That slipped by me on the first read too. (I know Steve is careful, so I should always reread his questions when I’m struggling with an issue like that.)

With this stipulation in mind, I’m no longer struggling to accept the answers to Steve’s questions, or to the ones I posed in an earlier post.

Here’s how I look at this problem now:

The expected cost associated with having to play with n bullets in the gun is:

E_n = n*Cd/6 + (6-n)*Cl/6,

where Cd is the cost of dying and Cl is the cost of living. (Presumably, Cd is positive and Cl is negative, but they don’t have to be.) If you pay a ransom of D dollars to change to m bullets, then the new expected cost is

R_m = m*Cd/6 + (6-m)*(Cl + D)/6

The threshold for D is obtained by setting E_n = R_m, which gives

D = (n-m)*(Cd-Cl)/(6-m).

If D is positive, then you’d pay the corresponding amount (or less) to change from n to m bullets. If D is negative, then you’d need to be paid a corresponding amount (or more) to change.

Some interesting things (I think):

The amount you would pay to switch from 2 bullets to 0 bullets is equal to the amount you would accept to switch from 2 bullets to 3 bullets.

The amount you would pay to remove any number (1, 2, …, 6) of bullets from a gun loaded with 6 is equal to the amount you would accept to switch from 0 bullets to 3 bullets, or from 2 bullets to 4 bullets, or from 4 bullets to 5 bullets.

But, then again, maybe I screwed up the logic or math. If so, someone will surely let me know.
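Here is a quick way to check the threshold formula and the claims above with exact arithmetic. It is a minimal sketch under the same linear assumptions as the comment (a dollar of ransom shifts the living outcome by exactly one dollar). The function name `threshold` is made up for illustration, and D is expressed in units of Cd-Cl unless a `spread` is supplied.

```python
from fractions import Fraction

def threshold(n, m, spread=Fraction(1)):
    """Break-even ransom D = (n - m) * (Cd - Cl) / (6 - m) for going from n to m bullets.

    `spread` stands for Cd - Cl (default 1, so the result is in units of Cd - Cl).
    Positive: you'd pay up to D. Negative: you'd need to be paid at least -D.
    """
    if not (0 <= n <= 6 and 0 <= m <= 5):
        raise ValueError("need 0 <= n <= 6 and 0 <= m <= 5 (m = 6 leaves no survivors)")
    return Fraction(n - m, 6 - m) * spread

# Question 1 (2 -> 0) and Question 2 (4 -> 3) give the same threshold.
print(threshold(2, 0), threshold(4, 3))  # 1/3 1/3

# Paying to go 2 -> 0 matches what you'd accept to go 2 -> 3.
assert threshold(2, 0) == -threshold(2, 3)

# Any reduction from 6 is worth Cd - Cl, the same amount you'd need to be
# paid to go 0 -> 3, 2 -> 4, or 4 -> 5.
assert all(threshold(6, m) == 1 for m in range(6))
assert threshold(0, 3) == threshold(2, 4) == threshold(4, 5) == -1
```

Using `Fraction` keeps the comparisons exact, so equalities like 2/6 = 1/3 are tested without floating-point noise.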

Alan Wexelblat:

Expected value of money plays into it because there is a theorem that says that if you think about expected value of money (in the right way) then you will always get the right answer to problems like this. But getting the right answer is not the same thing as getting the right *insight*, and to get the right insight, you should probably ignore expected values entirely.

Thomas Bayes: On your question 1:

Yes, I would pay all of my net worth in either case. In both cases, I will surely die if I don’t pay up, so it would be irrational to refuse to pay anything less than everything I’ve got.

Of course, if I am trying to “buy off” the person who is making me play, then I may pretend that I’d pay him less to remove 1 than to remove 6, in order to encourage him to remove 6. But that is a separate question.

If it came down to it, and he said, “You cannot influence my decision; I have already decided how many I will remove if you pay me. How much will you pay me?”, you really should, I believe, say the same number regardless of how many he might remove.

@Steve and Jon Campbell: Thanks, my earlier comments were complete nonsense when you phrase it that way.

This should probably be the point where I stop talking, but I’ll go on anyway. It seems we have generally shown that g(p,p’), the amount of money we should be willing to pay to reduce the risk of death from p to p’, is a function of (p-p’)/(1-p’). Intuitively, I think g(p,p)=0 and g(p,p”)=g(p,p’)+g(p’,p”). Does this determine g uniquely? Does such a g exist?

Sonic Charmer: You can’t make “Alive anyway” arguments the same way you can make “Dead anyway” arguments. That’s because if you’re dead, the amount you’ve paid doesn’t matter — but if you’re alive, the amount you’ve paid affects your quality of life.

When playing poker or any game, it is rational (indeed, crazy not to) to explore the motives of your opponent based on their actions and moves.

In question 1, I am being forced to play Russian Roulette by someone who is willing to give me a chance not to die and therefore probably doesn’t really care whether or not I die. He is just looking for money. And since he is forcing me to play, he could presumably force me to continue playing.

The rational option would seem to be to offer $1 and then negotiate for what my opponent wanted.

In question 2, no matter what I do, my opponent wants me to play and risk death. This is most likely a sociopath who gets off on terrorizing his victims and who really wants me to pay to remove a bullet, thereby reducing the chance that I’ll die, i.e., increasing the chance that he can play his psychotic game again.

The rational choice here seems to be to pretend to be suicidal and offer to pay him $10,000 to put 2 more bullets in the gun. Since the sociopath enjoys inflicting pain, I have increased my odds of being set free.

Jonathan Kariv:

On your “g(p,p”)=g(p,p’)+g(p’,p”)” hypothesis:

I see why you’ve written that, but here’s an example where that equation doesn’t work:

g(1,0) = my net worth

g(1,5/6) = my net worth

g(5/6,0) is probably not 0

The problem is that the g function must take into account how much money you currently have to spend, and if you calculate g(p,p”) in 2 steps, then the amount of money you have to spend changes after the 1st step, so the g function in the 2nd step is not the same as in the 1st.

Thinking through how much I should pay to remove bullets from a gun pointed at $100 (rather than at my head): if I paid $33 to remove 1 bullet from 4 and a loaded chamber came up, I would lose the $100 plus the $33. Therefore I should pay only about $16.67. With death, it doesn’t matter if I lose the “extra” money, so I should pay the same as in the 2-bullet case.
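The $100 version can be worked out as a plain expected-value calculation. This is a minimal sketch assuming risk neutrality (only expected dollars matter) and that a loaded chamber destroys the $100 while the payment is spent either way; `break_even_payment` is a hypothetical helper, not something from the thread.

```python
from fractions import Fraction

def break_even_payment(before, after, stake, chambers=6):
    """Largest up-front payment P that doesn't raise your expected loss.

    Expected loss without paying: stake * before / chambers.
    Expected loss after paying:   P + stake * after / chambers
    (P is spent whether or not a loaded chamber comes up).
    """
    return stake * Fraction(before - after, chambers)

stake = Fraction(100)  # the gun is pointed at $100 instead of at your head
two_of_two = break_even_payment(2, 0, stake)
one_of_four = break_even_payment(4, 3, stake)
print(round(float(two_of_two), 2), round(float(one_of_four), 2))  # 33.33 16.67
```

When the stake is your life instead, money you pay matters only in the states where you survive, which is why the death case does not halve the payment the way the $100 case does.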

Jonathan Campbell:

Your statement “it would be irrational to refuse to pay anything less than everything I’ve got” makes good sense to me. But, if the logic and math I’ve been using is correct, then the threshold for what we’d pay (or accept) to change from n bullets to m bullets is

D = (n-m)*(Cd-Cl)/(6-m),

where Cd and Cl are the costs we associate with dying and living, respectively. We can think of this as a 6×6 array of numbers with positive numbers when n is larger than m, and negative numbers when n is smaller than m.

When n is equal to 6, then this threshold is equal to Cd-Cl. So when we say we would give everything we have for any reduction from 6 bullets, does this mean that Cd-Cl is equal to our net worth at that time, or does this mean that Cd-Cl is much larger than any amount of money we could imagine having?

More importantly, once we put a dollar amount on any switch from n to m bullets, we are setting a dollar amount on all of them. Based on some simple scenarios that I’ve played through, I can’t imagine anyone being comfortable with the complete 6×6 array of ‘rational’ thresholds unless Cd-Cl is equal to infinity. Many people, I believe, would not play with any number of bullets for any amount of money. But for people who would populate the matrix with numbers other than infinity and -infinity, I doubt they would be comfortable with the prescribed relationships that ‘rational’ thought would force between the dollar amounts.

This is probably a dilemma for which people don’t want to be rational.

At the risk of getting yelled at by Steve Landsburg, here’s my response:

Question C pits these two scenarios:

1) 50% of the time I’m going to die.

2) The other 50% of the time, I answer question B

Whereas question B has only one scenario:

1) 100% of the time, I answer question B

I struggle to see how question B is the same as question C. They’re the same only 50% of the time. The other 50% I’m dead. I’m willing to pay a lot more to avoid question C.

Try thinking of it this way:

A) How much would you pay for a Rolls Royce?

B) I might kill you this morning, or I might not. If I don’t (and life returns to normal), how much would you pay for a Rolls Royce?

Ok. I think the question is which situation would you be willing to pay more to be in: A or B? And I think the rational answer is I’d pay more to be in situation A.

In reality the signs of my answer would be inverted. You would have to pay me very little to allow myself to be in situation A. But (unless you forced me) you’d have to pay me a *LOT* before I’d allow myself in situation B.

Perhaps you think that I should only be answering the question of how much I’d pay for a Rolls Royce. But when the original question asks “Which would you pay more for — the right to remove two bullets out of two, or the right to remove one bullet out of four?” I think it’s asking which situation I’d pay more to be in. And I think it’s rational to prefer being in the situation where you can guarantee survival.

dullgeek:

I try very hard never to be impatient with people who are asking honest questions. I do allow myself to get annoyed with people who just repeat the same thing over and over and over and over and over and over and over after (pretty) clearly not having bothered to read the various responses that explain the problem with what they’re saying. I see no reason to fear you’ll fall into that category.

You are certainly right that you’d pay more to be in situation A) than in situation B), but you haven’t been offered that choice. I claim the following things:

1) If you just look at Questions A and B (regarding the Rolls Royce) *as they are stated*, then they should have the same answer. If that’s not clear, I think Alan Wexelblat, in an earlier comment, said something that might help a lot: You’re on your way to buy the Rolls Royce. There is some chance you’ll be hit by lightning and killed on the way. Does that possibility affect what you’re willing to pay once you’ve safely reached the showroom? Surely not, because by then the threat of lightning has become irrelevant.

2) “How much would you pay for a Rolls Royce” is like “How much would you pay to escape a 1/3 chance of death?”. That should have the same answer as “How much would you pay to escape a 1/3 chance of death, given that you weren’t already struck by lightning this morning?”. That in turn should have the same answer as “How much would you pay to escape a 1/3 chance of death, given that you’ve managed to avoid being struck by one of the not-for-sale bullets?”. That in turn is the same question as “How much are you willing to pay up front to remove 1 of 4 bullets?” — because you’ll either be hit by one of the 3 not-for-sales, in which case you might as well have been hit by lightning, or you won’t be, in which case you’re buying your way out of a 1/3 chance of death.

Thomas Bayes:

In your framework, I think Cd-Cl is best thought of as the maximum amount of money that you could have access to in order to pay off the tormentor.

Given the assumption that “money has no value to you after you’re dead,” it immediately follows that Cd-Cl must not be anything less than the max amount of money you have access to. (If you start asking whether you’d be willing to steal money and so on, then you are essentially violating the spirit of this assumption, which is meant to suggest that once you’re dead, you no longer get utility from any money that you previously could have accessed.)

I think the original problem, stated as a dead-or-alive situation masks the point of the “paradox”. People are discussing how much life should be valued, or whether money has value once one’s dead.

Let me restate the problem in another form:

A) you have to choose between two tasks:

A1) the first task has a 1% probability of rendering you disabled for life

A2) the second task has a 2% probability of disabling you, but in that case, you are given a large disability compensation (say $10,000,000)

B) you have to choose between another two tasks:

B1) a 50% probability of disabling you, no disability compensation

B2) a 100% probability of disabling you, disability compensation as in A2

The paradox is that most people would choose A2 over A1 (given a large enough disability compensation), but B1 over B2; rationally they should choose either A1,B1 or A2,B2, since they are the same choice (in the A case you can ignore the 98% of the time that you end up unscathed).
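The claim that the A choice reduces to the B choice is a conditional-probability statement. A small sketch with the comment's numbers, assuming (as the comment implies) that both A tasks share the same 98% of outcomes where nothing happens, so A1's 1% disabling outcomes sit inside the same 2% slice as A2's.

```python
from fractions import Fraction

p_a1 = Fraction(1, 100)  # A1: chance of disability, no compensation
p_a2 = Fraction(2, 100)  # A2: chance of disability, with compensation

# Set aside the 98% where you're unscathed under either task and condition
# on the 2% slice where the choice actually matters.
something_happens = Fraction(2, 100)
print(p_a1 / something_happens)  # 1/2 -> B1: 50% disability, no compensation
print(p_a2 / something_happens)  # 1   -> B2: certain disability, with compensation
```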

Certainly I agree that the answer to the question of “how much would you be willing to pay for a rolls royce” should be the same whether I’ve gone through a life/death lottery or not.

But I’m not convinced that’s the question being asked, because that was just an analogy to the original question, which is: Which would you pay more for — the right to remove two bullets out of two, or the right to remove one bullet out of four?

To me, the original question is comparing the situations and asking which I’d pay more for. Even if in both situations there is a common question, the price of each situation changes based on the differences between the situations.

I assume that’s what carries through to the Rolls Royce example, as it’s an analogy to the original question. Meaning that the Rolls Royce example is actually asking about which situation I’d rather be in. Otherwise, I’m not sure I understand how the Rolls Royce question is analogous to the original question. If they’re not analogous, then how is the answer to the Rolls Royce question instructive on the original question?

Steve, why doesn’t this argument degrade into a version of Pascal’s Wager? Similar to Sonic Charmer, we should be able to restate the conclusion as an equivalence between 3 chambers and 1 bullet and N chambers and N-2 bullets. This should hold regardless of the size of N — specifically, even if N is really, really big (similar to Blake R). So, what probability do you assign to the possibility that you are wrong about God, and really you have to donate a lot of your money to charity to avoid ceasing to exist when your body dies? Maybe 1 in 1e60 or so? Ok, let’s set N = 2e60. You will almost certainly cease to exist at the end of your life. But, you should still be willing to donate as much of your money to charity for that minuscule glimmer of hope as you would pay to take a bullet out of a three-chambered gun. Is there something wrong with this reasoning, or should your favorite charity expect a large donation?

Can I ask you this set of questions, which I think are analogous to the original question:

Suppose, like me, you really like Kung Pao Chicken. There are two restaurants that make equally tasty Kung Pao Chicken, and they charge the exact same price. Restaurant A requires that you go through a toll booth to get to it. Restaurant B is run by a mass murderer, and there’s a 50% chance he’ll put a flavorless and lethal poison in your food. Would you be willing to pay the toll?

If you say that you’d prefer to pay the toll, why is this different from the Russian roulette example?

Restaurant A = question B

Restaurant B = question C

Kung Pao Price + toll = amount you’d pay to get rid of two of two bullets in a 6 shooter

Kung Pao Price alone = amount you’d pay to get rid of one of 4 bullets in a 6 shooter

@ Jonathan Campbell: You’ve got me there. Nice one.

Dullgeek:

Suppose I pose this problem:

I have two 3-shooters. One has three bullets in its three chambers. One has one bullet and two empty chambers.

First I will flip a coin to pick a 3-shooter to point at your head. Then, if it is the 2nd 3-shooter, I will ask how much you’d pay me to take the bullet out.

If I pick the “bad” 3-shooter, that’s rather like being struck by lightning. You’re dead, period. If I pick the good 3-shooter, you’re in the situation of buying your way out of a 1/3 chance of dying.

What’s that worth to you? Surely your answer should not depend on whether I ask you before or after I flip the coin. If I ask before I flip the coin, you might as well give the answer that you *know* you will want to give if and when the question becomes relevant.

Now my six-shooter is really two 3-shooters bundled together. When I spin the chamber, I’m flipping a coin: either it lands on one of the 3 not-for-sale chambers, which means I’m basically pointing a full 3-shooter at you, or it lands on one of the other 3, in which case I’m pointing a 1/3-loaded gun at you.

If I first spin the chamber, and then tell you “Ah, it’s landed on one of chambers four, five and six, with one bullet and two empty”, there’s some amount you’re willing to pay for that bullet. That should be no different than the amount you’re willing to pay for that bullet *before* I spin the chamber.
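The "two bundled three-shooters" argument can be checked by enumerating the six equally likely chambers. A sketch, assuming chambers 1 to 3 hold the not-for-sale bullets and chambers 4 to 6 hold one bullet and two blanks; the chamber numbering and the helper `survival` are illustrative only.

```python
from fractions import Fraction

def survival(loaded, chambers=range(1, 7)):
    """Probability that a uniformly spun cylinder stops on an empty chamber."""
    chambers = list(chambers)
    empty = sum(1 for c in chambers if c not in loaded)
    return Fraction(empty, len(chambers))

# Question 2's gun: chambers 1-3 hold the not-for-sale bullets,
# chamber 4 holds the bullet that is for sale, chambers 5-6 are empty.
not_for_sale = {1, 2, 3}
for_sale = {4}

before = survival(not_for_sale | for_sale)  # 2 empty of 6
after = survival(not_for_sale)              # 3 empty of 6

# The spin is a fair coin between the "full three-shooter" (1-3) and the
# "one-bullet three-shooter" (4-6); paying only changes the second half.
good_half = [4, 5, 6]
coin = Fraction(1, 2)
assert before == coin * survival(for_sale, good_half)  # 1/2 * 2/3
assert after == coin * survival(set(), good_half)      # 1/2 * 1
print(before, after)  # 1/3 1/2
```

The two assertions are the point: the whole effect of paying happens inside the good half, where it buys your way out of a 1/3 chance of death.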

Here is a little more on the issue I’m thinking about . . .

If I have this correct, the threshold for changing from an original number of bullets to a new number of bullets is equal to the difference between the cost you assign to dying and the cost you assign to living (Cd-Cl) multiplied by the appropriate number in this table:

http://dl.dropbox.com/u/1633558/Landsburg/rational_roulette/matrix.gif

A positive threshold means that you would pay to make the change; a negative threshold means that you would need to be paid to make the change.

If Cd-Cl is equal to the maximum amount of money that you have access to, then it appears that a rational person would play roulette with 4 bullets in the gun if they were paid twice this amount. Or they would only pay 1/6th of this amount to avoid playing with one bullet in the gun. I can’t imagine either of these being true.

As an example, suppose you have access to $600,000. You would pay all of this to get at least one bullet out of a fully loaded gun. Does that mean you would not pay more than $100,000 to avoid playing with a gun with one bullet? I doubt it. Does that mean that you would play with a gun with 4 bullets (rather than not play at all) if someone offered to pay you $1.2M? Probably not.

Let me put it another way. What is the maximum amount a person would pay to get a single bullet out of the gun? Is it also equal to their total worth? If it is, then they would pay their total worth in any scenario (because this is the scenario for which they would pay the least to change).

Now suppose someone said they would only pay $500K to avoid playing the game with one bullet. If they are rational, aren’t they now bound to set upper bounds on what they would pay in every other scenario based on my table? The max they would pay to avoid playing with 3 bullets would be equal to $1.5M, and the max they would pay to avoid playing with 6 bullets would be equal to $3M.
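Under the linear model, the dollar figures in the last two paragraphs come from multiplying the table entries (n-m)/(6-m) by Cd-Cl. A sketch reproducing them, assuming Cd-Cl is $600,000 in the first scenario and is backed out from the $500K answer in the second; `threshold` is a hypothetical helper.

```python
from fractions import Fraction

def threshold(n, m, spread):
    """Linear-model break-even payment (n - m) * spread / (6 - m); negative means you must be paid."""
    return Fraction(n - m, 6 - m) * spread

# Scenario 1: Cd - Cl equals everything you can access, $600,000.
spread = Fraction(600_000)
print(threshold(1, 0, spread))   # 100000  (most you'd pay to avoid 1 bullet)
print(-threshold(0, 4, spread))  # 1200000 (what you'd accept to face 4 bullets)

# Scenario 2: at most $500K to avoid 1 bullet, so Cd - Cl = 500K / (1/6).
spread = Fraction(500_000) / threshold(1, 0, Fraction(1))
print(spread)                    # 3000000
print(threshold(3, 0, spread))   # 1500000
print(threshold(6, 0, spread))   # 3000000
```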

I’m struggling to figure out how to associate actual numbers to any scenario when the answer to the six bullets in the gun question would be “all of the money I can find.” The only number I can assign to Cd-Cl that doesn’t seem to create contradictions is infinity. Can someone help me with this?

(By the way . . . is there a way to embed a gif into a response rather than provide a link?)

dullgeek:

Your restaurant example differs from the Russian Roulette example in several ways, of which the first is this: In the RR example, there are two separate situations, and you’re asked, separately, what you’d do in each of them. In your restaurant example (if I understand it correctly) you are choosing which restaurant to go to, which is like choosing which gun gets pointed at your head. You don’t have that option in the original question.

Thomas Bayes—

Without having looked at your table —

It is a theorem that people who obey the von Neumann/Morgenstern rationality axioms act as if they were maximizing expected utility.

This means that there is some function U such that: When the gun has m chambers filled, you will be willing to pay $x for n of the bullets if and only if

U(I-x) (6-m+n)/6 > U(I) (6-m)/6 (where I is your current wealth, and where the utility of death is normalized to zero)

So the amount x that you’re just willing to pay is achieved by changing the above inequality to an equality and solving for x.

The amount y I’d have to pay you to let me *add* n bullets to a gun with m is defined by

U(I+y)(6-m-n)/6 = U(I)(6-m)/6

Does this help?
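To see the expected-utility condition at work without assuming linearity, one can solve the indifference equation U(I-x)(6-m+n)/6 = U(I)(6-m)/6 numerically. A sketch assuming, purely for illustration, U(w) = sqrt(w) (so U(0) = 0, matching the normalization of death) and wealth I = 100; neither choice comes from the thread, and `max_payment` is a hypothetical helper.

```python
import math

def max_payment(U, wealth, m, n, chambers=6, tol=1e-12):
    """Largest x with U(wealth - x) * (chambers - m + n) >= U(wealth) * (chambers - m).

    m = bullets currently loaded, n = bullets removed, death has utility 0.
    Bisection on [0, wealth]; assumes U is increasing with U(0) = 0.
    """
    target = U(wealth) * (chambers - m) / (chambers - m + n)
    lo, hi = 0.0, wealth
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if U(wealth - mid) >= target:
            lo = mid  # still willing to pay mid
        else:
            hi = mid
    return lo

U = math.sqrt
q1 = max_payment(U, 100.0, m=2, n=2)  # remove 2 of 2
q2 = max_payment(U, 100.0, m=4, n=1)  # remove 1 of 4
print(q1, q2)  # both close to 500/9, about 55.56
```

Both questions reduce to U(I-x)/U(I) = 2/3, so they agree for any increasing U, not just the square root used here.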

John Faben says: “Why would you write something in such a hostile tone”

Because Steve obviously likes these trick questions that sucker people into an answer that he claims is irrational. But the trick here is almost entirely in the misleading way in which the problem is phrased. It does not show that anyone is irrational. It only shows that you can fool people by hiding assumptions. Ask the question in a more objective way, and you will get a better idea whether people are irrational.

Steve:

Thanks! My table and analysis were based on the assumption that the utility function is linear. In your notation, I assumed that the utility function was

U(I-x) = I-x.

I’m not sure why I did this, because I don’t see any reason for it to be linear. Of course, anything we can say about these questions that is true in general will be true for a linear utility function, but I think my concerns were specific to a linear utility function.

I’ll think about the more general utility function and see if I still feel uneasy.

Thanks for the help.

How about this point of view: you are essentially buying a chance to live. It may help to start from the virtual situation that you are “dead already” and you are buying the chance to “undead” yourself. Then I guess you have to consider the value you assign to the rest of your life, given the portion of your wealth you are able to carry through the Russian roulette. This would be a utility function, of course, although for most people not a very sensitive one to the money left (I very much like to live, rich or poor, but people differ).

The “independent” (of my actions) part of the choice should always be removed (subtracted) first.

The original question “how much would you pay” is still not totally clear to me, though. It would help to see some model for dealing with the counterparty. What makes them accept or refuse my offer? How much is removing one cartridge worth to them? It is a transaction, and I have difficulty imagining how it would work.

Ok. This seems to be an issue of how the original question is interpreted. You say, “…which is like choosing which gun gets pointed at your head. You don’t have that option in the original question.” I don’t understand why. The original question is:

Which would you pay more for — the right to remove two bullets out of two, or the right to remove one bullet out of four?

How is that not choosing which gun will be pointed at my head? Why would I ever pay more for a right to remove 2 out of 2 if that wasn’t going to exclude the 1 out of 4 gun from being pointed at my head and fired?

I’m having a hard time imagining another way to interpret the question. But that’s probably a function of my poor imagination. Can you help me understand how I should interpret this question?

Which leads me to this question, which I hope is not too far afield from the original point of your post: Isn’t the correct answer to this question (as well as the Google question) a function of how the reader interprets it? And is it fair to call the reader irrational for providing an answer to a question they interpreted differently than intended? Wouldn’t you expect irrational answers to trick questions?

Steve – love the explanation involving the two three-shooters and the coin flip; it really manages to get across the essence of the problem.

I think what most people are struggling with is the fact that, obviously, we would prefer the coin to come up heads (i.e., to pick the not-full shooter) if we had a choice, but we aren’t given any power to affect the outcome of the coin flip.

The warden’s gun is at your head, and your life flashes before your eyes – you realise that if you escape alive today, your life has a value of L to you. You may not have this money, but that is what you’d be willing to pay to save yourself from certain death. L may even involve other things besides money – slavery, indebtedness, whatever – but pay more than L, and you’d rather be dead. Pay exactly L, and you’re indifferent.

The gun has a probability p of killing you. The chance you’ll live is q=1-p. In this situation, your life is worth only q x L.

Then – a sunbeam of hope – your evil captor sneers and says “how much will you pay to reduce p by d?”

Suppose you pay P. If you die, it’s all the same. If you live, your life is now worth L-P. Your captor removes some bullets, and chance of surviving changes to q+d. Now your life is worth (q+d) x (L-P). Are you better off?

Well, you are if q x L < (q+d) x (L-P), that is, if P < dL/(q+d).

if q=2/3 and d=1/3, this gives P < L/3.

if q=1/3 and d=1/6, this gives P < L/3.

Hence removing 1 bullet from 4 is as valuable as removing 2 from 2.
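The inequality above can be checked with exact fractions. A sketch, assuming as in the comment that surviving is worth L to you and that money paid matters only if you survive; the helper name `max_ransom` is hypothetical, and L is set to 1 so results read as fractions of L.

```python
from fractions import Fraction

def max_ransom(q, d, L=Fraction(1)):
    """Break-even payment from q*L = (q + d)*(L - P), i.e. P = d*L/(q + d)."""
    return d * L / (q + d)

# Remove 2 of 2 bullets: survival 2/3 -> 1.
print(max_ransom(Fraction(2, 3), Fraction(1, 3)))  # 1/3
# Remove 1 of 4 bullets: survival 1/3 -> 1/2.
print(max_ransom(Fraction(1, 3), Fraction(1, 6)))  # 1/3
```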

If this seems counterintuitive, it's because we don't understand the situation. There's an evil mad prison warden pointing a gun at your head, for goodness' sake! How desperate are you??

The analysis I gave above says – “rational people grasp at straws when they are desperate”. It says “a person who faces a 2/3 chance of death will pay the same amount as a person facing a 1/3 chance, for only half the amount of reduction in their probability of dying”.

In fact, real people do grasp at straws. When told “you will die of this disease unless you have it treated” they will sell their worldly goods and plunge themselves into debt for treatments that have only a small chance of preserving their life. They could have achieved the same probability reduction by eating fewer hamburgers a decade earlier, but they weren’t desperate then. Are they irrational? The analysis here says “not necessarily”. Rational people pay more when they are desperate.

Finally, here’s a little puzzle. What’s wrong with this argument?

“Mike H is wrong! His formula says that to remove one bullet from 4, a rational person would pay L/3, and to remove one bullet from three, they’d pay L/4. Therefore to remove two bullets from 4, they should pay 7L/12. However, his formula says that to remove 2 bullets from 4, the rational person would pay L/2.”

I know some readers here have already got the answer.
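For anyone who wants to check their answer to the puzzle numerically: a sketch using the same formula, with the assumption (echoing Jonathan Kariv's earlier point that the money you have changes between steps) that after paying for the first bullet, surviving is worth L minus what you paid rather than L. The names are illustrative.

```python
from fractions import Fraction

def max_ransom(q, d, L=Fraction(1)):
    """Break-even payment P = d*L/(q + d), where L is what surviving is worth to you now."""
    return d * L / (q + d)

L = Fraction(1)
one_step = max_ransom(Fraction(1, 3), Fraction(1, 3), L)          # 4 -> 2 bullets at once

first = max_ransom(Fraction(1, 3), Fraction(1, 6), L)             # 4 -> 3 bullets
second = max_ransom(Fraction(1, 2), Fraction(1, 6), L - first)    # 3 -> 2, L already reduced
naive_second = max_ransom(Fraction(1, 2), Fraction(1, 6), L)      # ignores the first payment

print(one_step, first + second, first + naive_second)  # 1/2 1/2 7/12
```

Only the naive sum, which values survival at the full L after money has already been spent, produces the 7L/12 in the puzzle.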

Steve – thanks for the explanation- I understand now & agree. I also agree with those who say that this isn’t quite a good test of rationality, as (although the assumptions were stated upfront) they may/may not be intuitive or have parallels in regular experience, so taking them on-board is just tricky.

Anyway, I agree with the result now and note that the amount you should pay depends only on the fraction (#bullets to be removed)/(#empty chambers that would result). So this maps all such games onto the rationals in [0,1]. It also seems to me (from thinking through some examples) that the ‘how much would you pay’ function is continuous and increases from 0 to, I guess, infinity (or at least your net worth) as a function of p/q. For whatever reason, thinking of the result this way makes it far more intuitive and less surprising!

John,

You have no chance of affecting the outcome of the coin-flip. However, you might have a chance to affect what your captor does.

What seems rational is to try to come up with possible psychological profiles based on how you are being forced to play Russian Roulette. Based on the most likely profiles, you would then want to offer the amount of money that would win your freedom.

I would be willing to bet $5 (to one person) that an FBI profiler would say that you should pay more in Question 1 (a lot of $) than in Question 2 ($0).

Any other course of action seems irrational.

Okay, is this the idea?

We associate the utility of U(Y) to having Y dollars and being alive, and we associate the utility of 0 to having Y dollars and being dead.

If we are alive with some number of dollars (I), then the threshold for changing from having to play with n bullets in the gun to having to play with m bullets in the gun is

U(I-x)/U(I) = (6-n)/(6-m),

where x is the amount of money we have to pay (or be paid, if it is negative) to make the switch from n to m bullets.

If we call f(x) = U(I-x)/U(I), then we can use this table to determine the threshold value for f(x):

http://dl.dropbox.com/u/1633558/Landsburg/rational_roulette/matrix_2.gif

Unless the utility function is linear, about the only definitive thing we can say about this problem is that several of the options have the same utility threshold, right? For example:

2 bullets to 0 bullets = 4 bullets to 3

3 bullets to 0 bullets = 4 bullets to 2 bullets = 5 bullets to 4

4 bullets to 0 bullets = 5 bullets to 3 bullets

6 bullets to 5 bullets = 6 bullets to anything less than 6 bullets
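Since every threshold depends only on (n-m)/(6-m), the equal-threshold cases can be found mechanically. A sketch that groups every reduction n to m (with n > m) in a six-shooter by that ratio and prints the groups with more than one member; using this ratio as the grouping key is the only assumption.

```python
from collections import defaultdict
from fractions import Fraction

groups = defaultdict(list)
for n in range(1, 7):      # bullets before
    for m in range(n):     # bullets after (fewer than before)
        groups[Fraction(n - m, 6 - m)].append((n, m))

for ratio, moves in sorted(groups.items()):
    if len(moves) > 1:
        print(ratio, moves)
```

The groups with more than one member are exactly the four lines of the list above; every other reduction has a threshold of its own.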

Steve,

Ya, I took back my argument about linearity; it is right after my first post. I just wasn’t completely sure where or how I went wrong.

I guess I am comparing life to an investment. Many would agree we want high returns (life) and less variance (fewer or, in a funny way, more bullets in the gun; it’s a binomial outcome). If I met someone saying they were getting 20 percent returns on their investments, I would be very skeptical of the level of risk they were taking. For example, I would consider putting all of one’s available capital into one asset irrational; maybe not in your strict sense, but it is most likely a bad idea. It seems many Wall Street investment banks ran into this problem. Is there no argument that the outcome with less variance (no bullets) is better not only because of the lower probability of death but also because of the lowered variance?

On the other hand, if an investor takes no risk, they don’t get a return. That seems like a bad idea, so maybe some risk (bullets in the chamber) is good. I am going to guess you will argue I am changing your argument, but this makes it more interesting to me. This last paragraph was an epiphany after writing the second. Forgive me; I’m an undergrad.

Ok, I feel I may have a better argument.

Imagine you are in a store that sells 2 things, one of which you have to buy.

1) A gun with 0 bullets in the chamber, where you have to play Russian Roulette with it.

2) A gun where there are 3 out of 6 bullets in the chamber, where you have to play Russian Roulette with it.

You go into the store and there are set prices. Is there any way a rational person wouldn’t pay some marginal amount for case #1?

I see three choices/cases:

A) Case 1 Case 2 – I would have to think about it; if 1 was just a little more expensive I may opt for 1, but at some point I would probably choose 2.

This may be twisting your argument, I don’t really see where the important difference is though. My guess is it has to do with the removal of the bullets.

Let me suggest that anyone facing this question simply ask himself: ‘By what proportion will I increase my probability of living?’

In both cases 1&2 the answer is 50% (survival goes from 4/6 to 6/6 in one case and from 2/6 to 3/6 in the other). Hence, one would pay just as much for either. Also, one would pay more to make that percentage bigger.

It seems to me that this way of phrasing it removes anything counterintuitive or seemingly ‘paradoxical’. Indeed, the two cases could have been phrased in terms of their before/after # of empty chambers rather than of bullets, and I believe more people would have gotten it correct.

If so, it would buttress the case for people being confused/misled by the way the problem was stated, rather than that people are irrational.

B and C got cut off… sorry for the spam

B) Case 1 = Case 2 in price – I buy 1 and am happy to be alive for the same price as a 50-50 shot at death

C) Case 1 > Case 2 – I would have to choose based on how much more expensive 1 is.

Steven,

I see the error in parts 11 and 12 now. Given your correction, the maximum payment in either case is V/3. Thanks!

And thanks, too, for pointing out that the value of some composite thing (e.g. being alive and having X dollars) is not necessarily equal to the sum of the values of those two things.

Dullgeek:

Ah. I see what you misunderstood. I’m sorry I wasn’t more careful to avoid the potential for this confusion.

There are two separate questions here. One is “If the two-bullet gun is pointed at your head, how much would you pay to remove both bullets?” and the other is “If the four-bullet gun is pointed at your head, how much would you pay to remove one bullet?” The intent of the question was never to imagine that you’d be in both situations. It was: what would you do if *this* were the situation, and what would you do if this *other* thing were the situation instead?

Of course it is not fair to call a reader irrational for failing to understand the question (and when I say “failing”, I don’t mean to imply that the failure is on the part of the reader). But most people seem to have interpreted the question as I intended it, and for those people the analysis stands.

Thomas Bayes:

Okay, is this the idea?

What you’ve written looks exactly right to me.

What I always stress to my students, though, is that these calculations with expected utility threaten to obscure an important point: We do not assume that people are expected-utility maximizers. Instead, we conclude that they are expected utility maximizers (given the von Neumann/Morgenstern axioms). But all of the consequences of expected-utility maximization can be proved directly from the axioms without ever mentioning expected utility — and in many cases, you’ll get a whole lot more insight that way.

I get easily confused when we talk about rationality. I would tend towards, “some way in which it fails to capture the ‘right’ meaning of rationality” but might be convinced of, “we don’t always make good decisions, and that meditating on our failures can help us make better decisions in the future”

I certainly have made some bad choices. Does that mean I wasn’t rational? I’m not so sure.

Sometimes I make a choice that I regret immediately, but then later figure out it was the best choice. Sometimes I never regret it, but still conclude at some point it wasn’t the best choice.

I think the answer lies in there somewhere and also in the assumptions “The question is to be answered on the assumption that you have no heirs you care about, so money has no value to you after you’re dead.” No matter how many things we try to hold equal, we can never hold it all equal.

Just a quick check to make sure I haven’t got completely the wrong end of the stick. For a penalty less than death, it is rational to pay less for Q2 (removing 1 bullet out of 4). This is because if one of the 3 “not for sale” bullets comes up, you suffer the penalty and you have also paid to remove a bullet. With a penalty less than death, you still care about the money you paid. If the penalty is death, you do not care about the cost of removing a bullet, because you are dead. Therefore it is rational to pay the same for Q2 as for Q1 only if the penalty is death.

This is counter-intuitive because for almost all cases (except death) you would be wrong to pay the same. You have to check out the consequences in this special case to see the point.
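A minimal numerical check of this argument, under assumptions that are not in the comment: utility of money is u(w) = √w, and if the gun fires your utility becomes k·u(money left), where k = 0 stands for death (money is worthless) and 0 < k < 1 for some lesser, hypothetical penalty. The function name `max_payment` and the $1,000,000 starting wealth are illustrative only.

```python
import math


def max_payment(p_before: float, p_after: float, wealth: float, k: float = 0.0) -> float:
    """Largest payment x that leaves you indifferent between paying and not paying.

    p_before / p_after: chance the chamber that comes up is empty without / with paying.
    If the gun fires, utility is k * u(money left); k = 0 models death.
    Assumes u(w) = sqrt(w), purely for illustration.
    """
    u = math.sqrt
    # Expected utility of refusing: you keep all your wealth whichever chamber comes up.
    eu_refuse = (p_before + (1 - p_before) * k) * u(wealth)
    # Expected utility of paying x is (p_after + (1 - p_after) * k) * u(wealth - x).
    # Setting the two equal and inverting u(w) = sqrt(w) gives wealth - x.
    weight_pay = p_after + (1 - p_after) * k
    return wealth - (eu_refuse / weight_pay) ** 2


W = 1_000_000
for k in (0.0, 0.5):
    q1 = max_payment(4 / 6, 6 / 6, W, k)  # remove both bullets from a 2-bullet six-shooter
    q2 = max_payment(2 / 6, 3 / 6, W, k)  # remove one bullet from a 4-bullet six-shooter
    print(f"k={k}: Q1 ${q1:,.0f}  Q2 ${q2:,.0f}")
```

Under these assumptions, with k = 0 both questions give the same payment (about $555,556), while with k = 0.5 the Q2 payment (about $209,877) falls below the Q1 payment (about $305,556), as the comment predicts.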

Okay I think I get it all now. If you are being held at gunpoint and offered an alternative, any alternative, to reduce your risk of death, you should, by this logic, take it and pay all your wealth for it.

(Or is there a claim that this logic works only for these magic numbers, and not for any n slots and m bullets? I think it all reduces to the same thing: essentially, just ignore m − 2 bullets and you are back in the same logic.)

The implications for government are painful, aren’t they? If we are being held hostage by government, we should, by this logic, be willing to give up all our wealth for the slightest chance at some freedom. And so the government grows some more…

Phil: “Okay I think I get it all now. If you are being held at gunpoint and offered an alternative, any alternative, to reduce your risk of death, you should, by this logic, take it and pay all your wealth for it.”

No, this is definitely not the point of the example. For example, if I point a gun with 1,000,000 chambers and one bullet at you, you definitely shouldn’t be willing to pay me all of your net worth to remove the one bullet – that gives you a certainty of living as a poor man, rather than a 999,999-in-a-million chance of living as a rich man. It is perfectly rational to prefer the latter (and we all make decisions every day that imply that we do, in fact, prefer the latter).

The point of the example is that you can ignore the cases where you are dead anyway, because money has no worth when you are dead. Money definitely does have worth when you are alive.
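To see how small the rational payment can be in the million-chamber case, here is a sketch under assumptions that are not in the comment: utility of money u(w) = √w, death worth 0, and a hypothetical net worth of $1,000,000. With death worth 0, paying x is just worthwhile when p_after·u(W − x) = p_before·u(W).

```python
def max_payment(p_before: float, p_after: float, wealth: float) -> float:
    """Payment x solving sqrt(wealth - x) = (p_before / p_after) * sqrt(wealth).

    Death is assigned utility 0, so only the ratio of survival chances matters.
    u(w) = sqrt(w) is an illustrative assumption, not part of the original argument.
    """
    ratio = p_before / p_after
    return wealth * (1 - ratio ** 2)


W = 1_000_000
# One bullet in a gun with 1,000,000 chambers: survival goes from 999,999/1,000,000 to 1.
x = max_payment(999_999 / 1_000_000, 1.0, W)
print(f"${x:.2f} out of ${W:,}")
```

Under these assumptions the payment comes to about two dollars, nowhere near the whole $1,000,000.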

Harold: you have this right.

Phil:

If you are being held at gunpoint and offered an alternative, any alternative, to reduce your risk of death, you should, by this logic, take it and pay all your wealth for it.

There might be some people willing to do this, but I doubt there are many. Surely it’s not required by rationality; if it were, we’d all spend all our wealth adding safety features to our cars.

Steve: yes, exactly… the fact that we don’t is exactly what your blog post is about, the gap between “rational” and actual. No?

I am glad to have this sorted. I am interested in posing it as a willingness-to-accept problem. It seems reasonable that people would accept a large amount of money to take on a small risk of death; we all do this when we go to work. If an individual would accept $10M for Q1, i.e. a 1/3 chance of death, then how much (logically) should he accept for a 2/3 chance of death (the starting position for Q2)? It can’t simply be twice the amount for the 1/3 chance, or it would imply that for $30M he would accept certain death, which is absurd.
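A willingness-to-accept version can be sketched under assumptions that are not in the comment: utility of money u(w) = √w, death worth 0, and a hypothetical wealth level W chosen so that a 1/3 risk of death is worth exactly $10M. Accepting y for survival chance p is worthwhile when p·√(W + y) ≥ √W, i.e. y ≥ W(1/p² − 1).

```python
def accept_amount(p_survive: float, wealth: float) -> float:
    """Smallest payment y with p_survive * sqrt(wealth + y) >= sqrt(wealth).

    Death has utility 0 and u(w) = sqrt(w); both are illustrative assumptions.
    """
    if p_survive <= 0:
        return float("inf")  # no finite payment compensates certain death
    return wealth * (1 / p_survive ** 2 - 1)


# Hypothetical: pick wealth so that a 1/3 risk of death is worth exactly $10M (W is about $8M).
W = 10_000_000 / (1 / (2 / 3) ** 2 - 1)
for risk in (1 / 3, 2 / 3):
    print(f"risk {risk:.2f}: ${accept_amount(1 - risk, W):,.0f}")
print(accept_amount(0.0, W))  # certain death
```

Under these assumptions doubling the risk from 1/3 to 2/3 raises the required compensation from $10M to about $64M rather than $20M, and certain death cannot be bought at any price. A different utility function would give different numbers.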

Phil Maymin: Are you claiming that it somehow follows from the assumption of rationality that one should be willing to spend any amount to reduce the risk of death by any amount? If this were the case, it would surely mean there’s something wrong with our definition of rationality. What makes you think it’s the case?

Phil Maymin:

To follow up: All I’ve used is this: If you are willing to pay $x to reduce your risk of death from y to z in one situation, then you should be willing to pay $x to reduce your risk of death from y to z in some other situation. From this we learn pretty much nothing at all about how much you should be willing to pay to reduce your risk of death from v to w.

Steve: If there are 100 slots and 14 bullets, and you’re offered a choice to remove two bullets, can’t we decompose that using your method? So, 8 of those bullets remain, and you are exposed to that risk no matter what, but you are effectively offered the choice of removing two out of the other six. So in that sub-situation where neither those 8 bullets nor the 86 empty slots come up, you face the exact same choice as before. Hence you’d pay the same $x in this case.

Am I missing something obvious?

This whole thing has a kind of Monty Hall-ish feel to it, like some kind of macabre game show.

Speaking of irrational: Thank you for the free education.

…and by “free” I mean exclusively non-monetary. Of course, TANSTAAFL and understanding this certainly involved cost.

Phil Maymin: If there are 100 slots and 14 bullets, and you’re offered the choice to remove two … you are decomposing this (I think) into the following:

A) 94 chambers, of which 8 are filled

B) 6 chambers, of which 6 are filled

and you’re saying (I think) two things that are both wrong:

1) You’re saying that my willingness to pay to remove two bullets in this situation should be the same as my willingness to pay to remove two bullets from a filled six-shooter, because my choice only matters when one of the B-chambers comes up. This is wrong, because my choice also matters when an A-chamber comes up: some of the A-chambers are empty, and when one of those comes up I’m alive and I care how much money I’ve spent. The only way to render the A-chambers irrelevant is to fill all of them with bullets, so that if one of them comes up, it doesn’t matter what I’ve paid.

2) You seem to be saying that removing two bullets from a filled six-shooter is the same as one of the situations in the original problem — but in the original problem, you were removing two bullets from a six-shooter with just two bullets in it.
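A small exact-arithmetic check of this reply. If death is worth nothing, indifference between paying x and not paying means p_after·u(W − x) = p_before·u(W), so the payment depends only on the ratio p_before/p_after. The helper name `survival_ratio` is hypothetical; the 96-bullet case is the construction described above (all 94 A-chambers loaded, and 2 of the 6 B-chambers loaded).

```python
from fractions import Fraction


def survival_ratio(chambers: int, bullets_before: int, bullets_after: int) -> Fraction:
    """P(survive without paying) / P(survive after paying), as an exact fraction.

    With death worth nothing, two offers deserve the same payment exactly
    when this ratio is the same.
    """
    return Fraction(chambers - bullets_before, chambers - bullets_after)


six_shooter = survival_ratio(6, 2, 0)      # Question 1: remove both of 2 bullets
hundred_14 = survival_ratio(100, 14, 12)   # 100 slots, 14 bullets, remove two
hundred_96 = survival_ratio(100, 96, 94)   # A-chambers all loaded, 2 of 6 B-chambers loaded
print(six_shooter, hundred_14, hundred_96)
```

This prints `2/3 43/44 2/3`: the 14-bullet offer is not equivalent to Question 1, while the construction with every A-chamber loaded is.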

Steve,

Thanks for putting the role of expected utility in context. I’ll keep that in mind when I think about these problems.

You said that you didn’t pass the test at first. Can you share some of the details about that? What mistake in rational reasoning did you make?

If we had a 100-chamber gun, we would need 98 bullets in it. We should then pay the same to have 1 bullet removed as to have both bullets removed from a six-shooter with 2 bullets.

I haven’t read any of the discussion, so just ignore me if this has been addressed. But isn’t this just false:

“In Question C, half the time you’re dead anyway. The other half the time you’re right back in Question B. So surely questions C and B should have the same answer.”

Let’s say you were dead 99.9999% of the time, and otherwise you faced a gun with a bullet in it, or whatever it was. Now, you wouldn’t pay very much, let alone the same amount, to remove that bullet, since it probably won’t matter anyway.
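A sketch that tests this objection numerically, under assumptions not in the original post: utility of money u(w) = √w, death worth 0, and a hypothetical wealth of $1,000,000. You are dead with some probability regardless; otherwise you face a three-shooter with one bullet (Question B), and you may pay to remove that bullet. The payment is found by bisection, so any increasing u with u(0) = 0 could be swapped in.

```python
import math


def max_payment(p_before, p_after, wealth, u=math.sqrt, tol=1e-6):
    """Solve p_after * u(wealth - x) = p_before * u(wealth) for x by bisection.

    Death is worth 0 and u(0) = 0, so paying everything is as bad as dying.
    u(w) = sqrt(w) is an illustrative default, not part of the original argument.
    """
    target = p_before * u(wealth)
    lo, hi = 0.0, wealth  # paying 0 is at least as good as refusing; paying everything is worth 0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if p_after * u(wealth - mid) > target:
            lo = mid  # paying mid is still strictly better, so you would pay more
        else:
            hi = mid
    return lo


W = 1_000_000
for dead_anyway in (0.0, 0.5, 0.999999):
    alive = 1 - dead_anyway
    # Survive the "dead anyway" stage, then face a three-shooter with one bullet.
    x = max_payment(alive * 2 / 3, alive * 1.0, W)
    print(f"dead anyway {dead_anyway:.6f}: pay ${x:,.0f}")
```

Under these assumptions every line shows the same payment (about $555,556). The money you pay matters only in the worlds where you survive, and in those worlds you face exactly Question B.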

Steve,

Can you let me know if the following is an interesting example of symmetry or an obvious observation that any simpleton would see off the bat?

The “game” seems to be this:

A rational captor is forcing a rational victim to play 1 round of Russian Roulette in an effort to get money from the victim.

The victim, being rational, is not suicidal and likes having wealth. I.e., the positive outcomes are living and keeping as much money as possible. Also, dying “costs” more than losing money.

The captor, being rational, is averse to seeing someone kill himself and likes having wealth. I.e., the positive outcomes are peace of mind and getting as much money as possible.

Question 1

Victim Pays:

Captor has 100% chance of peace of mind, 100% chance of getting money.

Victim has 100% chance of life, 100% chance of losing money.

Victim doesn’t pay:

Captor has a 67% chance of peace of mind, 0% chance of getting money.

Victim has a 67% chance of life, 0% chance of losing money.

Question 2

Victim pays:

Captor has 50% chance of peace of mind, 100% chance of getting money.

Victim has 50% chance of life, 100% chance of losing money.

Victim doesn’t pay:

Captor has a 33% chance of peace of mind, 0% chance of getting money.

Victim has a 33% chance of life, 0% chance of losing money.

In both questions, the victim is trying to find the cost of peace of mind of the captor. Let’s call this $POM. At this point, $POM seems to be the same in both questions using your argument.

Or have I misunderstood the game?

I believe this is the general rule for deciding if two actions are rationally equivalent.

P1 is the probability that you live in game one.

P2 is the probability that you live in game two.

If you assign 0 utility to being dead, then the amount you should be willing to pay (or be paid) to switch from game one to game two is determined by the ratio P1/P2. For any scenario in which this ratio is equal, your threshold for switching should be the same.

Scenario A:

Game one: 2 bullets in a 6 shooter (P1 = 4/6)

Game two: 0 bullets in a 6 shooter (P2 = 6/6)

P1/P2 = 2/3

Scenario B:

Game one: 4 bullets in a 6 shooter (P1 = 2/6)

Game two: 3 bullets in a 6 shooter (P2 = 3/6)

P1/P2 = 2/3

Scenario C:

Game one: 14 bullets in a 100 shooter (P1 = 86/100)

Game two: 12 bullets in a 100 shooter (P2 = 88/100)

P1/P2 = 86/88

Scenarios A and B should be the same. Scenario C is not like them. In fact, because P1 is greater than 2/3 and P2 can be at most 1, P1/P2 can never fall to 2/3, so there is no Game Two that could make Scenario C the same as A or B.
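The ratio rule above can be checked with exact fractions. This is a minimal sketch: the helper name `survival` is hypothetical, and the extra "98 → 97" row is the 100-chamber case raised in an earlier comment.

```python
from fractions import Fraction


def survival(chambers: int, bullets: int) -> Fraction:
    """Probability that the chamber that comes up is empty."""
    return Fraction(chambers - bullets, chambers)


scenarios = {
    "A": (survival(6, 2), survival(6, 0)),
    "B": (survival(6, 4), survival(6, 3)),
    "C": (survival(100, 14), survival(100, 12)),
    "100-shooter, 98 -> 97": (survival(100, 98), survival(100, 97)),
}
for name, (p1, p2) in scenarios.items():
    print(name, p1 / p2)

# In scenario C, even removing all 14 bullets (P2 = 1) leaves P1/P2 = 43/50 > 2/3.
print(survival(100, 14) / survival(100, 0))
```

Scenarios A and B and the 98 → 97 case all give 2/3, while scenario C gives 43/44, and no choice of Game Two can bring it below 43/50.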

Does this make sense, or do I have this goofed up?

Steve:

Is this accurate?

A rational person who is willing to pay $100,000 to remove 2 bullets from a six-shooter containing 2 bullets (Question 1) might not be willing to pay $80,000 to remove 1 bullet from a six-shooter containing 4 bullets (Question 2).

It seems to me that this is true unless there are restrictions imposed on the way in which a rational person allocates utility.

Jonatan:

Keep in mind that you’re paying with money that you probably won’t need anyway.

The point is to ignore those cases where your decision doesn’t matter and to focus on those cases where it does matter. In those cases where it matters, you’re facing exactly the same old situation.

Oh yeah. What a neat problem.

I first thought that if you were in a country where people would come along offering you these sorts of deals all the time, it would be different. But both deals multiply your overall chance of survival by the same factor, 3/2.

I got it wrong. Beautiful puzzle.

Several people are tantalizingly close to the elegant explanation.

For those who still don’t see it, try this: What would you pay for a 50% increase in the probability you survive until tomorrow?

Does it matter whether p(survival) is .666, .333, or .0001?

It must be expressed in relative, not absolute terms. Ryan S had the right idea with the 98 vs 97 bullets example. Alex, in one of his posts, captured the framing in terms of survival, but used absolute instead of relative improvement.

A different take for those who can’t assign utils to not dying.

You’re on a game show. You can’t leave with negative money. There are 3 doors, 2 with $6,000 cash, 1 with a goat (worth nothing…). You can pick a door, but first you can offer the host $x from your winnings (currently $0) to replace the goat with the prize. What do you offer?

Same situation, 6 doors, 2 prizes, 4 goats. What do you offer from your winnings to replace 1 goat with a prize?

100 doors, 2 prizes, 98 goats. What do you offer to replace 1 goat with a prize?
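For a risk-neutral contestant (an assumption not in the puzzle), the largest sensible offer solves (prizes/doors)·6000 = ((prizes + 1)/doors)·(6000 − x). This sketch computes it exactly for the three setups; the name `max_offer` is hypothetical.

```python
from fractions import Fraction

PRIZE = 6_000  # dollars behind each prize door


def max_offer(doors: int, prizes: int, prize: int = PRIZE) -> Fraction:
    """Largest offer x (paid only out of winnings) for turning one goat into a prize.

    Assumes a risk-neutral contestant.
    Solves prizes/doors * prize == (prizes + 1)/doors * (prize - x).
    """
    before = Fraction(prizes, doors) * prize
    p_after = Fraction(prizes + 1, doors)
    return prize - before / p_after


for doors in (3, 6, 100):
    print(doors, max_offer(doors, 2))
```

All three setups give $2,000. For a risk-averse contestant the figure would differ, but since a goat leaves you with $0 whatever you offered, it would still be the same in all three setups.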

Michael Dekker: Great! An example where you still don’t care if the “wrong” door is opened, because the money you pay comes from your winnings. You only have to actually pay if the “right” answer comes up.

How many people would give an inconsistent answer to Michael Dekker’s question? Having spent time thinking about the Russian Roulette problem, it seems obvious. But is that because I have been thinking about it, or because when the question is posed in this way, it *is* more obvious? It is forcing us to think about the “empty chambers” rather than the full ones.

Interesting. I got it right via this perhaps less exact reasoning: I will pay what I want to control the risk I can control. In the experiment I cannot control the risk that an asteroid will hit me, or that the experimenter will kill me anyway. (I got the other rationality test wrong though). I confess I did not get far enough to notice 2/6 = 1/3, just that I could eliminate all the risk under my control. Had the second gun been a 7 shooter I would have said the same thing. Would that count as failure?

I have always argued that these little gedanken experiments are fun but prove less than they are claimed to, because people can learn. A gut reaction is not always right, but so what?

Ken B: